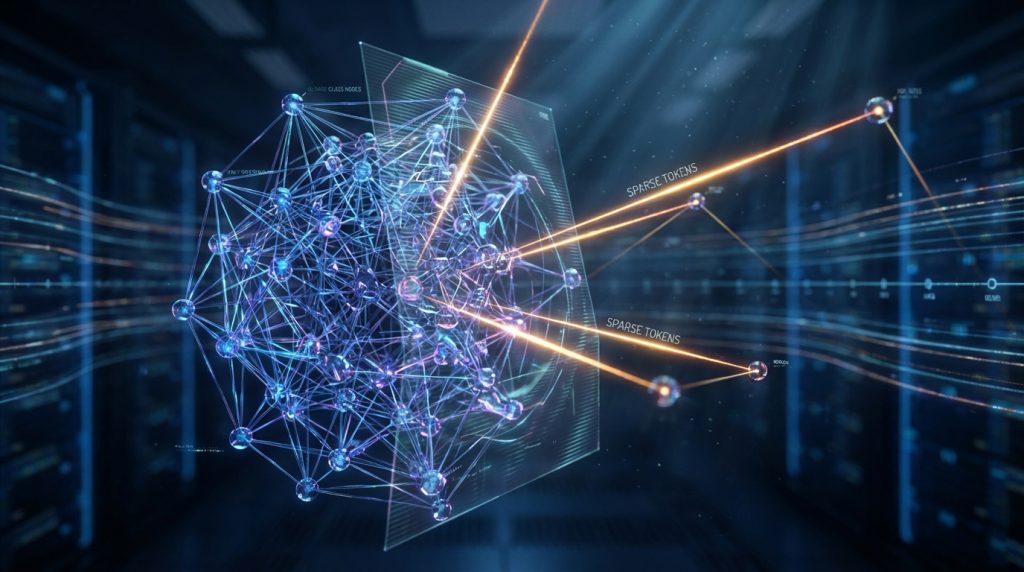

Cost-Effective Video AI: The Sparse Token Advantage

Published on January 23, 2026 by Admin

Executive Summary: As a budget-conscious CTO, you constantly look for innovation that doesn’t inflate your cloud computing bill. AI video generation is powerful but notoriously expensive. This article introduces sparse token models as a cost-effective solution. By focusing computational power only on the most informative visual data, this method can substantially cut costs and speed up video creation, offering a strategic edge for scaling video features affordably.

The Crushing Cost of Conventional AI Video

Generative AI is transforming industries. However, for many CTOs, the excitement is tempered by the reality of massive computational costs. Video generation models, in particular, are among the most resource-intensive AI applications available today. They demand immense GPU power to create each and every frame.

Consequently, this high cost creates a significant barrier. It can make large-scale video projects, like personalized marketing or dynamic content creation, financially impractical. Your teams might have brilliant ideas, but the budget simply cannot support the required compute hours. This is a common challenge for leaders trying to slash LLM costs and manage AI expenditures effectively.

Understanding Tokens in AI Video

To grasp the solution, we first need to understand the problem. AI models don’t “see” images or video like humans do. Instead, they break down visual information into small pieces called “tokens.” A token can represent a patch of an image, a sliver of a sound, or a word in a sentence. In video, a token usually corresponds to a small patch of a frame, sometimes extended across a few consecutive frames.
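
As a rough illustration of how quickly token counts grow, the sketch below counts the tokens a patch-based tokenizer would produce for a short clip. It assumes non-overlapping patches cut directly from pixels; the function name and patch sizes are illustrative choices, and many production models tokenize a compressed latent representation instead, which yields fewer tokens.

```python
# Hypothetical sketch: count the spatiotemporal patch tokens for a video clip.
# Assumes a ViT-style tokenizer that cuts the clip into non-overlapping
# patches of t_patch frames x patch x patch pixels (sizes are illustrative).

def count_video_tokens(frames: int, height: int, width: int,
                       t_patch: int = 1, patch: int = 16) -> int:
    """Return the number of tokens a simple patch tokenizer would produce."""
    if frames % t_patch or height % patch or width % patch:
        raise ValueError("clip dimensions must be divisible by the patch sizes")
    return (frames // t_patch) * (height // patch) * (width // patch)

# A 2-second, 24 fps, 256x256 clip with 2-frame x 16x16-pixel patches:
print(count_video_tokens(48, 256, 256, t_patch=2, patch=16))  # 6144
```

Even this small, low-resolution clip produces thousands of tokens, and a dense model processes all of them.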

In traditional video generation, models use a “dense” approach. This means they process every single token for every single frame. Imagine an artist painting every pixel on a canvas with the same level of detail, even the parts that are just a solid blue sky. It’s thorough, but incredibly inefficient.

Enter Sparse Tokens: A Smarter Approach

Sparse tokenization offers a more efficient alternative. Instead of processing everything, sparse models are trained to identify and focus only on the most important tokens: the parts of the video that contain the most critical information or are actively changing.

As a result, the model can ignore redundant or static background elements. It intelligently allocates its computational power where it matters most. This is the core principle that unlocks massive efficiency gains for your team and your budget.
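
To make “focus on what is changing” concrete, here is a minimal sketch that scores each patch by how much it changed since the previous frame and keeps only the top fraction. Frame differencing is an assumed, illustrative heuristic chosen for simplicity; real sparse models typically learn their own importance scores. The function names are hypothetical.

```python
# Minimal sketch: pick the "important" tokens of a frame by how much each
# patch changed since the previous frame. Frame differencing is an assumed,
# illustrative heuristic; real sparse models usually learn their scoring.

from typing import List

Frame = List[List[float]]  # grayscale intensities, frame[row][col]

def patch_change_scores(prev: Frame, curr: Frame, patch: int) -> List[float]:
    """Mean absolute pixel change for each patch, in row-major patch order."""
    rows, cols = len(curr), len(curr[0])
    scores = []
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            total, count = 0.0, 0
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    total += abs(curr[r][c] - prev[r][c])
                    count += 1
            scores.append(total / count)
    return scores

def select_tokens(scores: List[float], keep_ratio: float) -> List[int]:
    """Indices of the highest-scoring patches (at least one), in order."""
    k = max(1, round(len(scores) * keep_ratio))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])

# A 4x4 frame cut into four 2x2 patches; only the bottom-right patch changes.
prev = [[0.0] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[3][3] = 1.0
scores = patch_change_scores(prev, curr, patch=2)
print(scores)                       # [0.0, 0.0, 0.0, 0.25]
print(select_tokens(scores, 0.25))  # [3]
```

The static patches score zero and are dropped, which is exactly the “ignore the blue sky” behavior described above.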

How Sparse Tokens Slash Computational Costs

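The financial benefit of sparse tokens is direct and significant. Reducing the number of tokens processed per frame sharply cuts the required computation, and the effect can be more than proportional: self-attention compares every token with every other token, so its cost grows with the square of the token count. Fewer computations mean less GPU time for each video generated.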
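
To see how compute scales with token count, here is a back-of-the-envelope FLOP estimate for one standard transformer layer (self-attention plus an MLP with 4x expansion). It assumes a plain dense transformer block, counts 2 FLOPs per multiply-accumulate, and ignores softmax and normalization; the model width of 1,024 and clip size of 6,144 tokens are arbitrary example values.

```python
# Back-of-the-envelope FLOP count for one standard transformer layer
# (self-attention + MLP with 4x expansion), counting 2 FLOPs per
# multiply-accumulate. Softmax, norms and other minor terms are ignored.

def layer_flops(n_tokens: int, d_model: int) -> int:
    projections = 8 * n_tokens * d_model ** 2   # Q, K, V and output projections
    attention = 4 * n_tokens ** 2 * d_model     # Q @ K^T and weights @ V
    mlp = 16 * n_tokens * d_model ** 2          # two matmuls, d -> 4d -> d
    return projections + attention + mlp

dense = layer_flops(6144, 1024)
sparse = layer_flops(6144 // 5, 1024)  # keep roughly 20% of the tokens
print(f"sparse layer costs {sparse / dense:.1%} of the dense layer")  # 12.0%
```

Under these assumptions, keeping about 20% of the tokens costs about 12% of the dense layer, because the quadratic attention term shrinks faster than the linear terms.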

For example, a model might determine that only 20% of the tokens in a frame are essential for generating the next one. It can then skip most of the processing for the other 80%. End-to-end savings can be somewhat smaller than that figure, because selecting tokens has its own overhead and some stages may still run densely, but the reduction in cloud spending is direct and makes your AI budget stretch much further, allowing you to do more with less.
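
The 20% example applies to the stages that can actually skip tokens. A rough Amdahl-style estimate shows how that translates to the whole pipeline. The function name and all input shares below are hypothetical placeholders, not measurements.

```python
# Amdahl-style estimate: only part of a generation pipeline's compute can be
# made sparse; other stages (for example, decoding final frames) may stay dense.
# All fractions used here are hypothetical inputs, not measurements.

def overall_cost_fraction(sparsifiable_share: float,
                          sparse_cost_ratio: float,
                          selection_overhead: float = 0.0) -> float:
    """Cost of the sparse pipeline relative to the dense one (1.0 = no savings).

    sparsifiable_share: share of dense compute spent in stages that can skip tokens
    sparse_cost_ratio:  cost of those stages after sparsification (e.g. 0.2)
    selection_overhead: extra compute for scoring/selecting tokens, as a share
                        of the original dense cost
    """
    dense_part = 1.0 - sparsifiable_share
    return dense_part + sparsifiable_share * sparse_cost_ratio + selection_overhead

# Skipping 80% of the work in stages that account for 90% of the compute,
# with token selection costing an extra 2% of the dense budget:
fraction = overall_cost_fraction(0.9, 0.2, 0.02)
print(f"relative cost {fraction:.0%}, savings {1 - fraction:.0%}")
```

With these placeholder inputs, the pipeline ends up at about 30% of the dense cost (roughly 70% savings rather than 80%), which is why it pays to measure the whole pipeline rather than a single stage.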

The Speed Advantage: Faster Iteration and Deployment

Cost savings are not the only advantage. Sparse processing also brings a dramatic increase in generation speed. Because the model has less work to do, it can produce videos much faster than its dense counterparts. This acceleration has profound business implications.

Your product teams can iterate on new video features more quickly. Your marketing department can generate campaign assets in hours, not days. This speed translates into a more agile and responsive organization, capable of capitalizing on opportunities without being bogged down by slow rendering times.

Practical Applications for Budget-Conscious Teams

The theory behind sparse tokens is compelling, but for a CTO the true value lies in its practical applications. This technology is not just an academic exercise; it’s a tool for building real-world, cost-effective products.

Here are a few ways your teams can leverage this efficiency:

- Personalized Content at Scale: Generate unique video messages for thousands of users without an astronomical budget.

- Synthetic Data Creation: Quickly create vast datasets of synthetic video to train other machine learning models more cheaply.

- Rapid Prototyping: Test new video-based product ideas or ad creatives without committing significant financial resources.

- Dynamic In-App Previews: Offer users dynamic video previews of features or content, enhancing user experience.

Maximizing Token Efficiency

Ultimately, the goal is to get the most value out of every token you process. Sparse modeling is a powerful technique in this domain. By focusing on what’s essential, you are inherently boosting your return on investment for every dollar spent on compute.

This approach aligns perfectly with a broader strategy of maximizing token efficiency in neural video synthesis. It’s about working smarter, not just harder, to achieve your technical and business objectives.

Key Considerations for Implementation

Adopting sparse token models requires some strategic thought. While the benefits are clear, it’s important to approach implementation with a clear understanding of the trade-offs.

Balancing Cost, Speed, and Quality

The primary consideration is the balance between efficiency and visual fidelity. In some cases, an extremely “sparse” model might produce videos with slightly lower quality than a dense model. The key is to find the sweet spot that meets your product’s quality bar while maximizing cost savings.
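
Finding the sweet spot can be treated as a simple selection problem: evaluate a few sparsity settings, then pick the cheapest one that still clears your quality bar. This is a minimal sketch; the `SparsityResult` type, the quality metric, and every number in the example are hypothetical placeholders for your own benchmark results.

```python
# Minimal sketch of the "sweet spot" search: given evaluation results for
# several sparsity settings, choose the cheapest one that still clears the
# product's quality bar. Metric and numbers are placeholders, not measurements.

from dataclasses import dataclass
from typing import List, Optional

@dataclass
class SparsityResult:
    keep_ratio: float      # fraction of tokens processed
    quality: float         # higher is better, from your own evaluation
    cost_per_video: float  # e.g. measured GPU-seconds or dollars

def cheapest_acceptable(results: List[SparsityResult],
                        quality_bar: float) -> Optional[SparsityResult]:
    """Lowest-cost setting whose quality meets the bar, or None if none do."""
    acceptable = [r for r in results if r.quality >= quality_bar]
    return min(acceptable, key=lambda r: r.cost_per_video, default=None)

# Placeholder values for illustration only:
sweep = [
    SparsityResult(1.0, 0.95, 10.0),
    SparsityResult(0.5, 0.93, 5.5),
    SparsityResult(0.2, 0.85, 2.5),
]
best = cheapest_acceptable(sweep, quality_bar=0.9)
print(best.keep_ratio if best else "no setting meets the bar")  # 0.5
```

Raising or lowering `quality_bar` per product surface (for example, stricter for hero content than for in-app previews) lets the same sweep serve several use cases.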

For many applications, such as social media content or quick previews, the highest possible fidelity is not necessary. In those cases, the cost and speed benefits of sparse tokens often outweigh a minor drop in quality. It is crucial to test and determine the right balance for each specific use case.

Choosing the Right Models and Frameworks

The field of generative AI is evolving rapidly. New models and frameworks that leverage sparsity are continuously being released. Your engineering team will need to stay informed about the latest developments to choose the best tools for the job.

Look for models that are explicitly designed for efficient inference. Pay attention to open-source projects and research papers that focus on token reduction and conditional computation. Partnering with the right AI platform or leveraging these advanced models can significantly simplify the implementation process.
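
Conditional computation can be illustrated with token skipping: an expensive block runs only on selected tokens, while the others pass through unchanged, as if carried by the residual connection. This mirrors the general idea behind token-skipping and mixture-of-depths style designs; it is not the API of any particular framework, and `sparse_apply` and `expensive_block` are hypothetical stand-ins.

```python
# Sketch of conditional computation via token skipping: an expensive block
# runs only on the selected tokens, while the rest pass through unchanged
# (as if carried by the residual connection). Not any framework's real API.

from typing import Callable, List, Sequence

Token = List[float]

def sparse_apply(tokens: Sequence[Token],
                 selected: Sequence[int],
                 block: Callable[[Token], Token]) -> List[Token]:
    """Run `block` on the selected tokens and add its output residually."""
    out = [list(t) for t in tokens]          # skipped tokens stay as-is
    for i in selected:
        update = block(tokens[i])
        out[i] = [x + u for x, u in zip(tokens[i], update)]
    return out

calls = 0

def expensive_block(token: Token) -> Token:
    """Placeholder for a costly layer; counts how often it is invoked."""
    global calls
    calls += 1
    return [0.5 * x for x in token]

tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
result = sparse_apply(tokens, selected=[1, 3], block=expensive_block)
print(result)  # [[1.0, 2.0], [4.5, 6.0], [5.0, 6.0], [10.5, 12.0]]
print(calls)   # 2
```

The expensive block runs twice instead of four times; in a real model, that skipped work is where the GPU savings come from.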

The Future is Efficient Video

As AI video becomes more integrated into digital products, managing its cost will become a top priority for every CTO. Relying on brute-force, dense computation is not a sustainable long-term strategy. It’s too expensive and too slow.

Sparse tokenization represents the future of scalable and cost-effective video generation. By adopting this technology now, you can build a significant competitive advantage, enabling your company to innovate faster and more affordably than ever before.

Frequently Asked Questions

What is the main difference between sparse and dense tokens in video?

The main difference is focus. Dense token models process every single piece of visual information in a frame. In contrast, sparse token models intelligently identify and process only the most important pieces, such as moving objects, while ignoring static or redundant parts like a clear sky or a background wall. This makes them much more efficient.

Will using sparse tokens reduce my video quality?

There can be a trade-off, but for many use cases it is negligible. While a dense model might produce slightly higher fidelity, a well-tuned sparse model delivers quality that is more than sufficient for applications like marketing content, social media videos, and personalized messages. The key is to find the right balance between cost savings and your specific quality requirements.

Is this technology difficult to implement for my team?

The complexity is decreasing. While it once required deep research expertise, many new open-source models and AI platforms are making sparse tokenization more accessible. Your team can often leverage pre-trained models designed for efficient inference, which significantly reduces the implementation burden. The focus shifts from building from scratch to fine-tuning and integrating existing solutions.

What are the best use cases for sparse token video generation?

Sparse token generation excels in high-volume, cost-sensitive applications. For example, it’s ideal for creating thousands of personalized video ads, generating synthetic data for AI training, rapid prototyping of video concepts, and creating dynamic previews within an app. Any scenario where speed and budget are critical concerns is a great fit.

How much can I realistically save with this method?

Savings can be substantial, potentially ranging from 50% to 80% or more in computational cost compared to dense models. The exact amount depends on the specific model, the content of the video, how aggressively “sparse” the processing is, and how much of the pipeline still runs densely. Still, the reduction in required GPU time is usually significant, with a direct, positive impact on your cloud bill.
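
To translate a percentage into dollars, a simple estimate multiplies volume, GPU time per video, and GPU price. This is a hypothetical sketch; the function names and every input (video volume, GPU-seconds per video, hourly price, cost fraction) are placeholders to replace with your provider’s pricing and your own measurements.

```python
# Hypothetical cloud-bill estimate: turn a compute-cost reduction into
# monthly dollars. All inputs below are placeholders, not real prices or
# measurements; substitute your provider's pricing and your own numbers.

def monthly_gpu_cost(videos_per_month: int, gpu_seconds_per_video: float,
                     price_per_gpu_hour: float) -> float:
    """Dense monthly GPU spend in dollars."""
    return videos_per_month * gpu_seconds_per_video / 3600 * price_per_gpu_hour

def monthly_savings(videos_per_month: int, dense_gpu_seconds: float,
                    cost_fraction: float, price_per_gpu_hour: float) -> float:
    """Dollars saved when sparse generation costs `cost_fraction` of dense."""
    dense = monthly_gpu_cost(videos_per_month, dense_gpu_seconds, price_per_gpu_hour)
    return dense * (1.0 - cost_fraction)

# Placeholder inputs: 10,000 videos, 120 GPU-seconds each at $3 per GPU-hour,
# with sparse generation costing 40% of the dense run (60% savings).
print(f"${monthly_savings(10_000, 120, 0.4, 3.0):,.2f} saved per month")
```

With these placeholder inputs, a $1,000 monthly dense bill drops by $600; the same arithmetic scales linearly with volume.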
