Architecting the Future of Multimodal Token Orchestration

Published on January 23, 2026 by Admin

The world of artificial intelligence is no longer silent or static. Instead, it is becoming a rich tapestry of text, images, audio, and video. For machine learning (ML) architects, this multimodal shift presents a monumental challenge. Mastering multimodal token orchestration is therefore essential for building next-generation AI systems.

This article explores the core concepts, current challenges, and future strategies for orchestrating tokens across different data types. We will cover the practical implications for architects and provide a roadmap for designing robust, efficient, and coherent multimodal models. Ultimately, this is your guide to navigating the next frontier of AI development.

What is Multimodal Token Orchestration?

To begin, let’s break this complex topic down into simple parts. Multimodal AI systems understand and process information from multiple sources at once. For instance, they can watch a video, listen to its audio, and read its subtitles simultaneously. Each of these data streams is broken down into fundamental units called “tokens”: typically subword pieces for text, small patches for images, and short time slices for audio.
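The sketch below shows, under deliberately simple assumptions, how three streams might be cut into tokens. It uses a naive whitespace split for text (production systems use subword tokenizers), fixed-size square patches for images, and fixed-length frames for audio; the function names, the toy 8 × 8 image, and the 25 ms frame size are all illustrative choices, not any particular model's settings.

```python
from typing import List

def tokenize_text(text: str) -> List[str]:
    # Naive whitespace split; real systems use subword tokenizers such as BPE.
    return text.split()

def patchify_image(image: List[List[int]], patch: int) -> List[List[int]]:
    # Cut an H x W grid of pixel values into non-overlapping patch x patch tiles
    # (dropping any leftover edge rows/columns) and flatten each tile into one token.
    h, w = len(image), len(image[0])
    tokens = []
    for r in range(0, h - h % patch, patch):
        for c in range(0, w - w % patch, patch):
            tile = [image[r + i][c + j] for i in range(patch) for j in range(patch)]
            tokens.append(tile)
    return tokens

def frame_audio(samples: List[float], frame_len: int) -> List[List[float]]:
    # Split a 1-D waveform into consecutive non-overlapping frames,
    # dropping any trailing partial frame.
    return [samples[i:i + frame_len] for i in range(0, len(samples) - frame_len + 1, frame_len)]

text_tokens = tokenize_text("a cat sits on the mat")
image_tokens = patchify_image([list(range(8)) for _ in range(8)], patch=4)  # toy 8 x 8 image
audio_tokens = frame_audio([0.0] * 16000, frame_len=400)  # 1 s at 16 kHz, 25 ms frames
print(len(text_tokens), len(image_tokens), len(audio_tokens))
```

Even this toy example shows that the streams produce very different numbers and shapes of tokens, which is exactly what orchestration has to reconcile.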

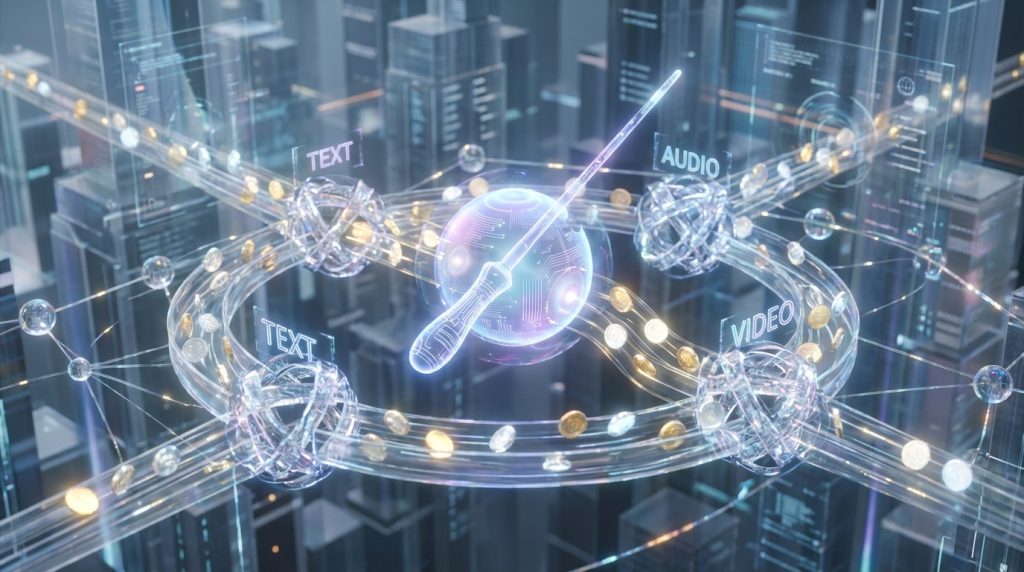

Token orchestration is the art and science of managing these diverse token streams. It involves aligning, synchronizing, and blending tokens from text, images, and audio so that the model forms a single, unified understanding of the input. Without proper orchestration, the model cannot reliably relate one input stream to another.
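One simple way to picture alignment and synchronization is to tag every token with its modality and a start time, then merge the streams into one time-ordered sequence. This is a minimal sketch that assumes each stream is already sorted by time; the `Token` type and its fields are hypothetical, and real models usually add learned modality and position embeddings rather than plain tags.

```python
import heapq
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Token:
    modality: str   # e.g. "text", "image", "audio"
    start: float    # time in seconds at which this token begins
    value: str      # placeholder payload; a real token would be an ID or a vector

def interleave(*streams: List[Token]) -> List[Token]:
    # Merge already time-sorted streams into one sequence ordered by start time.
    # When two tokens share a start time, heapq.merge keeps the order of the input streams.
    return list(heapq.merge(*streams, key=lambda t: t.start))

video = [Token("image", 0.0, "frame0"), Token("image", 0.5, "frame1")]
audio = [Token("audio", 0.0, "a0"), Token("audio", 0.25, "a1"), Token("audio", 0.5, "a2")]
text = [Token("text", 0.1, "hello")]

seq = interleave(video, audio, text)
print([t.value for t in seq])
```

The output sequence places each frame next to the audio and text that occur at the same moment, which is the kind of unified view the paragraph above describes.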

The Conductor of AI Perception

Think of an ML architect as the conductor of an orchestra. The violins (text tokens), cellos (audio tokens), and percussion (image tokens) are all playing at once. Orchestration ensures they play in harmony. It prevents the AI from getting confused by conflicting or unsynchronized information. As a result, the final output is coherent and intelligent.

The Core Challenges Architects Face Today

Building effective multimodal systems is incredibly difficult. Architects currently grapple with several fundamental problems that slow down progress. These challenges demand innovative solutions and careful architectural planning.

The Alignment Problem

The most significant hurdle is semantic alignment. How do you ensure the model understands that the word “cat” in a text token and a picture of a cat in an image token refer to the same concept? This requires a shared representational space in which different modalities can be compared directly. Achieving this alignment is difficult, and it lies at the heart of orchestration.
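Once both modalities live in the same space, "refers to the same concept" becomes something measurable, usually cosine similarity. In the sketch below, text and image encoders produce vectors of different sizes, and a per-modality linear projection maps both into a shared 2-D space. All vectors and projection matrices are hand-picked for illustration; in a real system they are learned.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def project(m: Matrix, v: Vector) -> Vector:
    # Linear projection: each row of m yields one coordinate in the shared space.
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical encoder outputs with different native sizes (3-D text, 4-D image).
text_cat = [1.0, 0.0, 0.2]
image_cat = [0.9, 0.1, 0.0, 0.3]
image_car = [0.0, 1.0, 0.8, 0.0]

# Hypothetical projections from each modality into a shared 2-D space.
W_text = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
W_image = [[1.0, 0.0, 0.0, 0.5], [0.0, 1.0, 1.0, 0.0]]

t = project(W_text, text_cat)
sim_cat = cosine(t, project(W_image, image_cat))
sim_car = cosine(t, project(W_image, image_car))
print(round(sim_cat, 3), round(sim_car, 3))
```

The text "cat" lands close to the cat image and far from the car image, even though the raw vectors were not directly comparable before projection.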

Computational Expense and Latency

Processing multiple data streams is computationally expensive. Video, in particular, generates a massive volume of tokens for every second of footage. Managing this data firehose requires immense processing power and memory. Moreover, keeping all streams synchronized in real time without adding latency is a major architectural headache. For example, a model generating video with audio must keep lip movements aligned with the spoken words.
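A back-of-the-envelope calculation shows why video is so demanding. This sketch assumes a ViT-style setup in which each 224 × 224 frame is split into 16 × 16 patches (196 tokens per frame) and frames are sampled at 30 per second; many real models sample fewer frames or compress patches, so the numbers are illustrative only.

```python
def video_tokens(seconds: float, fps: int = 30, frame_px: int = 224, patch_px: int = 16) -> int:
    # Non-overlapping square patches give (frame_px // patch_px) ** 2 tokens per frame.
    per_frame = (frame_px // patch_px) ** 2
    # Total tokens = number of sampled frames * tokens per frame.
    return int(seconds * fps) * per_frame

for secs in (1, 10, 60):
    print(f"{secs} s of video -> {video_tokens(secs):,} tokens")
```

Under these assumptions, one second of footage yields 5,880 tokens and one minute yields 352,800, which is why frame sampling and token reduction matter so much.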

Emerging Strategies in Token Orchestration

Fortunately, researchers and engineers are developing powerful new techniques to address these challenges. These strategies are paving the way for more sophisticated and efficient multimodal models.

Cross-Modal Attention Mechanisms

One of the most successful approaches is cross-modal attention. This technique allows the model to selectively focus on different parts of each data stream. For instance, when processing a video of someone speaking, the model can learn to pay more attention to the mouth region of the video when audio tokens are present. This dynamic focus helps the model build strong connections between modalities.
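Cross-modal attention is usually built from scaled dot-product attention in which the queries come from one modality and the keys and values from another. The pure-Python sketch below lets a single audio query attend over three video patch tokens. The vectors are invented for illustration, and real models use learned query, key, and value projections with many heads.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[Vector, Vector]:
    # Scaled dot-product scores between one query (e.g. an audio token)
    # and every key (e.g. video patch tokens).
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output: attention-weighted sum of the value vectors.
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, out

audio_query = [1.0, 0.0]      # hypothetical "speech is present" features
video_keys = [[2.0, 0.0],     # patch covering the mouth region
              [0.0, 1.0],     # background patch
              [0.1, 0.1]]     # another background patch
video_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

weights, fused = cross_attention(audio_query, video_keys, video_values)
print([round(w, 2) for w in weights])
```

The mouth-region patch receives the largest weight, so the fused output is dominated by the visual information most relevant to the audio.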

Joint Embedding Spaces

Another key strategy is the use of joint embedding spaces. The goal here is to project tokens from all modalities into a single, shared vector space. In this space, similar concepts from different modalities are located close to each other. For example, a text description of a sunset and an image of a sunset would have very similar vector representations. This makes it much easier for the model to reason across different data types.
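Joint embedding spaces are commonly trained with a contrastive objective, as popularized by CLIP: matched text-image pairs in a batch are pulled together and mismatched pairs are pushed apart. Below is a minimal pure-Python sketch of a symmetric InfoNCE-style loss; the temperature of 0.07 and the toy vectors are assumptions, and production code would compute this with a tensor library on learned embeddings.

```python
import math
from typing import List

Vector = List[float]

def normalize(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def cross_entropy(logits: Vector, target: int) -> float:
    # -log softmax(logits)[target], computed with the log-sum-exp trick.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[target]

def clip_style_loss(text: List[Vector], image: List[Vector], temperature: float = 0.07) -> float:
    t = [normalize(v) for v in text]
    im = [normalize(v) for v in image]
    n = len(t)
    # logits[i][j]: cosine similarity of text i and image j, divided by the temperature.
    logits = [[sum(a * b for a, b in zip(t[i], im[j])) / temperature for j in range(n)] for i in range(n)]
    # Pair i is the correct match for row i (text -> image) and column i (image -> text).
    text_to_image = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    image_to_text = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (text_to_image + image_to_text) / 2

texts = [[1.0, 0.1], [0.1, 1.0]]       # e.g. "a sunset", "a cat"
aligned = [[0.9, 0.0], [0.0, 0.8]]     # images in the matching order
shuffled = [aligned[1], aligned[0]]    # same images, wrong order
print(clip_style_loss(texts, aligned) < clip_style_loss(texts, shuffled))
```

Minimizing this loss is what pushes a sunset caption and a sunset photo toward nearby points in the shared space.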

Token Merging and Efficiency

To combat computational costs, architects are exploring token reduction techniques. These methods merge or prune redundant tokens while trying to preserve the information the task actually needs. For example, in a long video, consecutive frames that are nearly identical can be represented by fewer tokens. This focus on token efficiency is crucial for making large-scale multimodal systems practical and affordable.
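A simple version of the frame example groups consecutive frames whose embeddings are nearly identical and replaces each group with its average. This sketch assumes per-frame embeddings are already available and uses an arbitrary cosine-similarity threshold of 0.98. Published token-merging methods typically operate on tokens inside the network, so treat this as a simplified stand-in rather than any specific method.

```python
import math
from typing import List, Tuple

Vector = List[float]

def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def merge_similar_frames(frames: List[Vector], threshold: float = 0.98) -> List[Tuple[Vector, int]]:
    # Group consecutive frames whose similarity to the group's first frame
    # is at least `threshold`, then replace each group with its mean vector.
    # Returns (merged_vector, number_of_frames_merged) pairs.
    groups: List[List[Vector]] = []
    for f in frames:
        if groups and cosine(groups[-1][0], f) >= threshold:
            groups[-1].append(f)
        else:
            groups.append([f])
    merged = []
    for g in groups:
        mean = [sum(col) / len(g) for col in zip(*g)]
        merged.append((mean, len(g)))
    return merged

frames = [[1.0, 0.0], [0.99, 0.01], [1.0, 0.02],   # nearly static shot
          [0.0, 1.0], [0.01, 1.0]]                  # after a scene cut
merged = merge_similar_frames(frames)
print(len(frames), "->", len(merged), [count for _, count in merged])
```

Keeping the count alongside each merged vector lets later stages know how much footage a token stands for, which a plain pruning approach would discard.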

The Future: What’s on the Horizon?

The field of token orchestration is evolving rapidly. Looking ahead, several exciting developments promise to revolutionize how we build multimodal AI. These future trends will empower architects to create even more capable and integrated systems.

Unified Tokenizers

A major goal is the creation of a universal tokenizer. Currently, we use different tokenizers for text, images, and audio. A unified tokenizer, however, could process any data type and convert it into a common format. This would dramatically simplify model architecture and training pipelines, making multimodal AI development much more accessible.
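One common step in this direction is to give every modality's discrete codes a slot in a single shared vocabulary, so one model reads one stream of integer IDs. This sketch assumes images and audio have already been quantized into codebook indices (for example by a VQ-style encoder); the `SharedVocab` class and the vocabulary sizes are hypothetical.

```python
from typing import Dict, List, Tuple

class SharedVocab:
    """Hypothetical shared ID space: each modality gets a contiguous ID range."""

    def __init__(self, sizes: Dict[str, int]):
        self.ranges: Dict[str, Tuple[int, int]] = {}
        offset = 0
        for modality, size in sizes.items():
            self.ranges[modality] = (offset, offset + size)  # half-open [start, end)
            offset += size
        self.total = offset

    def encode(self, modality: str, codes: List[int]) -> List[int]:
        # Shift local codes into this modality's slice of the shared vocabulary.
        start, end = self.ranges[modality]
        for c in codes:
            if not 0 <= c < end - start:
                raise ValueError(f"code {c} out of range for {modality}")
        return [start + c for c in codes]

    def decode(self, token_id: int) -> Tuple[str, int]:
        # Map a shared ID back to (modality, local code).
        for modality, (start, end) in self.ranges.items():
            if start <= token_id < end:
                return modality, token_id - start
        raise ValueError(f"id {token_id} outside vocabulary")

vocab = SharedVocab({"text": 32000, "image": 8192, "audio": 1024})
sequence = vocab.encode("text", [17, 42]) + vocab.encode("image", [5]) + vocab.encode("audio", [3])
print(sequence, vocab.total)
print([vocab.decode(i) for i in sequence])
```

This does not make the per-modality encoders disappear, but it does let a single model and training pipeline consume all modalities as one sequence.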

Dynamic Orchestration Agents

Imagine an AI agent that acts as a master orchestrator. This agent could dynamically decide which modality to prioritize based on the task and context. For instance, if a user asks a question about a video, the agent might prioritize the audio and text transcript. If the question is about a visual detail, it would shift focus to the image tokens. This real-time, adaptive orchestration will lead to more flexible and context-aware models.
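The routing decision described above can be sketched as a function that turns a question into per-modality priorities and then splits a fixed token budget accordingly. The keyword sets, priority weights, and 4,096-token budget are illustrative assumptions; a real orchestrator would more likely rely on a learned classifier or the model itself than on keyword matching.

```python
from typing import Dict

# Hypothetical cue words suggesting which modality a question depends on.
VISUAL_CUES = {"color", "wearing", "see", "shown", "look", "background"}
AUDIO_CUES = {"say", "said", "hear", "sound", "music", "speaker"}

def modality_priorities(question: str) -> Dict[str, float]:
    words = set(question.lower().replace("?", "").split())
    visual = len(words & VISUAL_CUES)
    audio = len(words & AUDIO_CUES)
    if visual > audio:
        return {"image": 0.7, "audio": 0.1, "text": 0.2}
    if audio > visual:
        return {"image": 0.1, "audio": 0.5, "text": 0.4}
    return {"image": 1 / 3, "audio": 1 / 3, "text": 1 / 3}

def allocate_budget(priorities: Dict[str, float], budget: int) -> Dict[str, int]:
    # Split the budget proportionally; give any rounding remainder to the top modality.
    total = sum(priorities.values())
    alloc = {m: int(budget * p / total) for m, p in priorities.items()}
    top = max(priorities, key=priorities.get)
    alloc[top] += budget - sum(alloc.values())
    return alloc

print(allocate_budget(modality_priorities("What did the speaker say about pricing?"), 4096))
print(allocate_budget(modality_priorities("What color is the car in the background?"), 4096))
```

Expressing priorities as a token budget ties the agent's decision directly to compute cost, which is where adaptive orchestration pays off.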

Generative World Models

Ultimately, the goal of token orchestration is to build generative world models. These are comprehensive AI systems that can simulate and interact with a consistent, multimodal reality. Such models could generate entire scenes with synchronized video, spatial audio, and descriptive text. Achieving this requires precise orchestration and a deep understanding of how different modalities relate, drawing on advanced techniques such as semantic token mapping for lifelike voice generation.

Practical Implications for ML Architects

This evolving landscape has direct consequences for how ML architects design and build systems. Staying ahead of the curve requires a shift in mindset and a focus on new priorities.

As an architect, your role is shifting from building isolated models to designing integrated systems of perception. The future belongs to those who can make different AI senses work together as one.

Key Architectural Considerations

- Flexible and Modular Design: Architectures must be designed for flexibility. You should be able to easily add or remove modalities without rebuilding the entire system.

- Data Curation and Alignment: The quality of your model depends heavily on your data. Therefore, significant effort must be spent on curating high-quality, well-aligned multimodal datasets.

- Cost-Performance-Latency Balance: Every architectural decision involves a trade-off. You must constantly balance computational cost, model performance, and real-time latency to meet product requirements.
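The requirement to add or remove modalities without rebuilding the system is often met by keeping per-modality encoders behind a common interface and registering them by name. The sketch below is a hypothetical, framework-free illustration: `ModalityRegistry` and the toy lambda encoders are invented for this example, and each encoder simply maps raw input to fixed-width token vectors.

```python
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Encoder = Callable[[object], List[Vector]]

class ModalityRegistry:
    """Hypothetical plug-in registry: modalities can be added or removed at runtime."""

    def __init__(self, dim: int):
        self.dim = dim
        self.encoders: Dict[str, Encoder] = {}

    def register(self, name: str, encoder: Encoder) -> None:
        self.encoders[name] = encoder

    def unregister(self, name: str) -> None:
        self.encoders.pop(name, None)

    def encode(self, inputs: Dict[str, object]) -> List[Tuple[str, Vector]]:
        # Encode only modalities that are registered and present in the input,
        # and check that every encoder respects the shared token width.
        sequence: List[Tuple[str, Vector]] = []
        for name, raw in inputs.items():
            if name not in self.encoders:
                continue  # unregistered modality: skip it rather than fail
            for vec in self.encoders[name](raw):
                if len(vec) != self.dim:
                    raise ValueError(f"{name} encoder produced width {len(vec)}, expected {self.dim}")
                sequence.append((name, vec))
        return sequence

registry = ModalityRegistry(dim=2)
registry.register("text", lambda s: [[float(len(w)), 0.0] for w in s.split()])
registry.register("audio", lambda xs: [[0.0, float(x)] for x in xs])

seq = registry.encode({"text": "hi there", "audio": [0.5]})
registry.unregister("audio")
seq_text_only = registry.encode({"text": "hi there", "audio": [0.5]})
print(len(seq), len(seq_text_only))
```

The shared width check is the contract that keeps modules interchangeable: any new encoder that honors it can be plugged in without touching the rest of the pipeline.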

Frequently Asked Questions

What is the difference between multimodal fusion and orchestration?

Multimodal fusion typically refers to the specific point in an architecture where data streams are combined. Orchestration, on the other hand, is a broader concept. It encompasses the entire process of managing tokens, including pre-processing, alignment, synchronization, and fusion.
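To make the idea of a fusion point concrete, the sketch below contrasts two common choices: early fusion, where token sequences are concatenated before a shared model processes them, and late fusion, where each modality is processed separately and only the outputs are combined. The `mean_pool` function is a placeholder standing in for real networks.

```python
from typing import List

Vector = List[float]

def mean_pool(tokens: List[Vector]) -> Vector:
    # Placeholder "model": average the token vectors feature by feature.
    return [sum(col) / len(tokens) for col in zip(*tokens)]

def early_fusion(text: List[Vector], image: List[Vector]) -> Vector:
    # Combine first: one model sees the concatenated token sequence.
    return mean_pool(text + image)

def late_fusion(text: List[Vector], image: List[Vector]) -> Vector:
    # Process each modality separately, then average the two outputs.
    t, i = mean_pool(text), mean_pool(image)
    return [(a + b) / 2 for a, b in zip(t, i)]

text = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]  # three text tokens
image = [[0.0, 1.0]]                          # one image token
print(early_fusion(text, image), late_fusion(text, image))  # [0.75, 0.25] [0.5, 0.5]
```

Even with this trivial placeholder the results differ: early fusion weights each modality by how many tokens it contributes, while late fusion gives each modality an equal say. Choosing between them is one decision within the broader orchestration process.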

How does token orchestration impact LLM costs?

Poor orchestration leads to inefficiency and waste. Processing more tokens than necessary, or failing to align them properly, increases computational load, which drives up both training and inference costs. Good orchestration therefore translates directly into lower operational expenses.
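Part of why wasted tokens are so costly is that standard self-attention compares every token with every other token, so that part of the cost grows with the square of sequence length. The short calculation below assumes dense attention and ignores the feed-forward and projection layers, whose cost grows only linearly; the sequence lengths are arbitrary examples.

```python
def attention_scores(n_tokens: int) -> int:
    # Dense self-attention computes one score per (query, key) pair.
    return n_tokens * n_tokens

before = attention_scores(10_000)   # e.g. an unpruned multimodal sequence
after = attention_scores(5_000)     # the same input after pruning half the tokens
saving = 1 - after / before
print(f"{before:,} -> {after:,} scores ({saving:.0%} fewer)")
# prints: 100,000,000 -> 25,000,000 scores (75% fewer)
```

Halving the token count removes about three quarters of the attention-score work, which is why pruning and merging redundant tokens has an outsized effect on cost.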

What skills are most important for an architect working on this?

A deep understanding of different data formats (video, audio codecs, etc.) is crucial. In addition, expertise in attention mechanisms, vector databases, and distributed computing is essential. Finally, strong systems design skills are needed to manage the complexity.

Is token orchestration relevant for smaller, specialized models?

Yes, absolutely. Even a simple model that processes images and text captions requires orchestration. The principles of alignment and efficient token management apply at any scale. In fact, good orchestration is even more critical for resource-constrained environments like edge devices.