Semantic Token Mapping for Lifelike Voice Generation

Published on January 23, 2026 by Admin

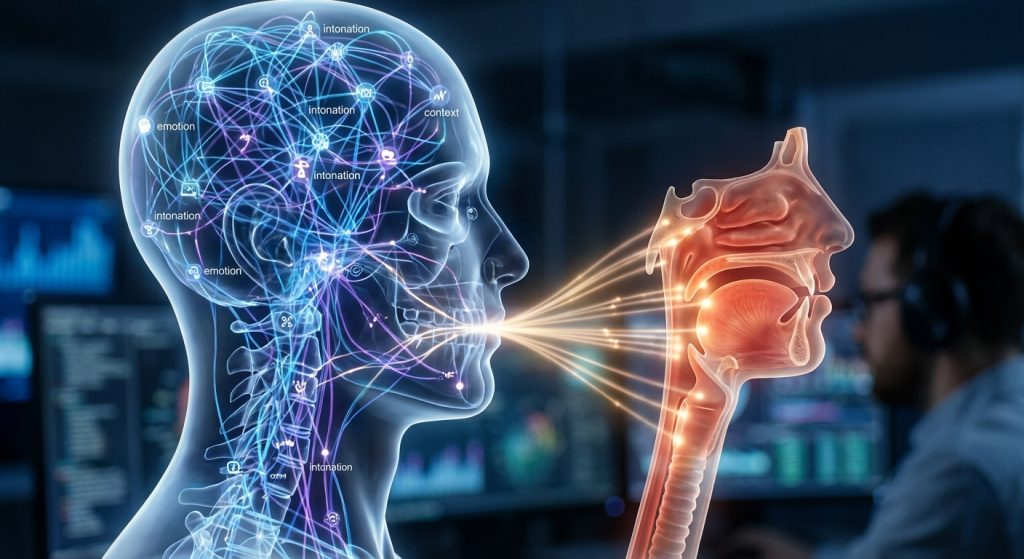

For decades, text-to-speech (TTS) systems have struggled with a key challenge: sounding truly human. While clarity has improved, the emotional range and natural prosody of speech have remained elusive. However, a new approach is changing the landscape. Semantic token mapping offers a path toward generating realistic, expressive, and context-aware voices. This technology moves beyond simple pronunciation to capture the very meaning behind the words.

This article explores this breakthrough for speech scientists. We will cover the limitations of traditional methods and then dive into how semantic tokens work. Moreover, we will discuss the mapping process that turns meaning into sound and its incredible potential for the future of voice generation.

What Are Traditional TTS Tokens?

Historically, most text-to-speech systems relied on basic units of sound. These units, or tokens, are typically phonemes or graphemes. A phoneme is the smallest unit of sound in a language, like the ‘k’ sound in “cat.” A grapheme is the written letter or group of letters that represents that sound.

These systems work by first converting input text into a sequence of these phonetic tokens. Then, a model generates audio that matches this phonetic sequence. While this method is effective for creating intelligible speech, it has significant drawbacks.
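The text-to-phoneme step described above can be sketched roughly as follows. This is a minimal illustration, not a production front end: the `LEXICON` table, its ARPAbet-style symbols, and the `text_to_phonemes` helper are all assumptions made for this example.

```python
# Toy pronunciation lexicon (hypothetical entries, ARPAbet-style symbols).
LEXICON = {
    "that's": ["DH", "AE", "T", "S"],
    "great": ["G", "R", "EY", "T"],
    "cat": ["K", "AE", "T"],
}


def text_to_phonemes(text: str) -> list[str]:
    """Convert text into a flat phoneme sequence via dictionary lookup."""
    phonemes = []
    for word in text.lower().split():
        # Normalize curly apostrophes and drop surrounding punctuation.
        word = word.replace("\u2019", "'").strip(".,!?\"")
        # Unknown words fall back to their graphemes (uppercased letters).
        phonemes.extend(LEXICON.get(word, list(word.upper())))
    return phonemes


# Punctuation is discarded, so a statement and an exclamation
# yield exactly the same token sequence.
print(text_to_phonemes("That's great."))
print(text_to_phonemes("That's great!"))
```

Note that both calls produce the same sequence: nothing in the phoneme tokens records how the sentence was meant to be said.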

The Limits of Phoneme-Based Models

The primary limitation is a lack of context. Phoneme-based models know *how* to pronounce a word but don’t understand *what* the word means. As a result, the generated speech often sounds flat and robotic. It lacks the natural rhythm, intonation, and emotional color that humans use to convey meaning.

For example, the phrase “That’s great” can be said with genuine excitement, bitter sarcasm, or simple acknowledgment. A traditional TTS system would likely produce the same neutral version every time. This is because it has no information about the speaker’s intent or the surrounding context.

The Rise of Semantic Tokens: A New Paradigm

Semantic token mapping introduces a fundamentally different approach. Instead of focusing only on sounds, it starts with meaning. This method uses powerful large language models (LLMs) to analyze the input text first. The goal is to understand the context, sentiment, and underlying intent.

Based on this understanding, the LLM generates a sequence of “semantic tokens.” These tokens are not phonetic. Instead, they are discrete codes that represent high-level concepts like prosody, emotion, and speaker characteristics. In essence, they are a blueprint for *how* the text should be spoken.

How Semantic Tokens Work

The process involves a two-stage architecture. First, a language model reads the text and outputs semantic tokens. These tokens capture the nuance that phonemes miss. For instance, a specific token might encode a “questioning” intonation, while another might represent a “joyful” tone.

Next, a separate audio model takes these semantic tokens as input. This article calls it a vocoder, using the term loosely; in many systems an acoustic decoder sits between the tokens and the final waveform generator. This model’s job is to translate the semantic blueprint into an actual audio waveform. Because it receives rich, meaningful instructions, it can produce speech that is far more natural and expressive.
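To make the two stages concrete, here is a deliberately simplified sketch. Both stages are stand-ins: a punctuation rule replaces the language model, and a sine-tone generator replaces the audio model. The token names, pitch values, and function names are all hypothetical and chosen only for illustration.

```python
import math

# Hypothetical semantic token inventory; real systems learn far larger codebooks.
QUESTIONING, JOYFUL, NEUTRAL = 0, 1, 2


def text_to_semantic_tokens(text: str) -> list[int]:
    """Stage 1 stand-in: a real system would run a language model here."""
    stripped = text.strip()
    if stripped.endswith("?"):
        style = QUESTIONING
    elif stripped.endswith("!"):
        style = JOYFUL
    else:
        style = NEUTRAL
    # Emit one style token per word, purely for illustration.
    return [style] * len(stripped.split())


def semantic_tokens_to_waveform(tokens, sample_rate=16000, seconds_per_token=0.2):
    """Stage 2 stand-in: render each token as a tone whose pitch depends on style."""
    base_pitch_hz = {QUESTIONING: 180.0, JOYFUL: 220.0, NEUTRAL: 140.0}
    samples_per_token = round(sample_rate * seconds_per_token)
    waveform = []
    for index, token in enumerate(tokens):
        pitch = base_pitch_hz[token]
        if token == QUESTIONING:
            # Questions rise in pitch toward the end of the utterance.
            pitch *= 1.0 + 0.3 * (index + 1) / len(tokens)
        for n in range(samples_per_token):
            waveform.append(math.sin(2 * math.pi * pitch * n / sample_rate))
    return waveform


tokens = text_to_semantic_tokens("Is that great?")
audio = semantic_tokens_to_waveform(tokens)
print(tokens, len(audio))
```

The point of the split is that the second stage never sees the text. Everything it knows about how to speak arrives through the tokens.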

Benefits Over Traditional Models

The advantages follow directly from the architecture. Because the decision about how a line should be spoken is separated from the generation of the audio itself, each stage can specialize, and the resulting speech is more realistic. The key benefits include:

- Improved Prosody: The rhythm, stress, and intonation of speech sound much more natural and human-like.

- Emotional Expressiveness: Models can generate speech that accurately reflects emotions like happiness, sadness, or anger.

- Contextual Awareness: The voice adapts based on the surrounding text, leading to more dynamic and engaging narration.

The Role of Semantic Token Mapping

Semantic token mapping is the critical bridge in this new architecture. It is the process of converting the high-level instructions from the language model into a representation that the audio model can interpret. This “map” ensures that the semantic intent is accurately translated into audible speech characteristics.

Think of it like a composer writing a musical score. The composer (the LLM) writes notes and instructions (semantic tokens) that convey the mood and tempo. The orchestra (the vocoder) then reads this score to perform the piece. The mapping is the shared language of musical notation that makes this translation possible.
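One simple way to picture the mapping is as a codebook lookup from token IDs to conditioning values for the audio model. The sketch below is an assumption-laden illustration: the `ProsodyTarget` fields, the three hand-written codebook entries, and the neutral fallback are all hypothetical, and a real system would learn this mapping rather than hard-code it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProsodyTarget:
    pitch_scale: float     # multiplier on baseline pitch
    duration_scale: float  # multiplier on baseline sound duration
    energy_scale: float    # multiplier on baseline loudness


# Hypothetical codebook; in practice these values are learned from data.
CODEBOOK = {
    0: ProsodyTarget(1.00, 1.00, 1.00),  # neutral
    1: ProsodyTarget(1.20, 0.90, 1.15),  # joyful
    2: ProsodyTarget(0.90, 1.20, 0.85),  # sad
}


def map_tokens(tokens: list[int]) -> list[ProsodyTarget]:
    """Look up conditioning for each token; unknown IDs fall back to neutral."""
    return [CODEBOOK.get(token, CODEBOOK[0]) for token in tokens]


for target in map_tokens([1, 2, 99]):
    print(target)
```

In the musical analogy, the codebook plays the role of the notation: both sides must agree on what each symbol means for the performance to match the score.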

Challenges in Semantic Mapping

Despite its promise, this technology is not without challenges. Firstly, creating a robust and consistent mapping is difficult. The model must learn to associate thousands of abstract semantic tokens with precise audio features. Any inconsistency can lead to unnatural-sounding results.

In addition, managing the sheer volume of tokens can be computationally expensive. Researchers are actively exploring ways to make the process more efficient. In particular, many are working on token compression to reduce audio latency, which is vital for real-time applications.
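As one very simple illustration of token compression, consecutive repeated tokens can be collapsed into (token, run length) pairs. This is only a sketch of the general idea; whether any particular production system uses this exact scheme is an assumption, and the `compress` and `decompress` names are made up for this example.

```python
from itertools import groupby


def compress(tokens: list[int]) -> list[tuple[int, int]]:
    """Collapse runs of identical tokens into (token, run_length) pairs."""
    return [(token, len(list(run))) for token, run in groupby(tokens)]


def decompress(pairs: list[tuple[int, int]]) -> list[int]:
    """Expand (token, run_length) pairs back into the original sequence."""
    return [token for token, count in pairs for _ in range(count)]


tokens = [5, 5, 5, 2, 2, 7]
packed = compress(tokens)
print(packed)
print(decompress(packed) == tokens)
```

The scheme is lossless, and it helps most when the same token repeats across many adjacent frames, for example during a sustained tone or a pause.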

Recent models such as Google’s SoundStorm, which generates audio from semantic tokens, and Meta’s Voicebox point in this direction. They demonstrate zero-shot voice cloning and expressive speech synthesis from just a short audio sample.

Practical Applications for Speech Scientists

The implications of semantic token mapping are vast. For speech scientists, this technology opens up new frontiers in research and application development. The ability to control vocal attributes with such precision is a game-changer.

For example, this allows for the creation of highly realistic virtual assistants that can convey empathy. It also enables dynamic character voices in video games that adapt to in-game events. Furthermore, audiobooks can be narrated with a level of emotional depth that was previously out of reach for synthetic voices. Fine-grained control over semantic tokens is at the heart of these advancements.

The Future of Voice Generation

We are moving toward a future where distinguishing between human and synthetic speech becomes increasingly difficult. Semantic token mapping is a massive step in that direction. Future work will likely focus on even greater control over voice characteristics, such as age, accent, and unique vocal tics.

Moreover, as models become more efficient, we can expect to see this technology integrated into a wider range of consumer devices. From personalized voice assistants to real-time voice translation with emotional preservation, the possibilities are truly exciting. This shift from phonetic reproduction to semantic representation marks a new era for speech synthesis.

Frequently Asked Questions

What is the main difference between a semantic token and a phoneme?

A phoneme is a basic unit of sound. It tells a model *what* sound to make. In contrast, a semantic token is a unit of meaning. It tells a model *how* to make a sound, including its emotion, rhythm, and intonation.

Is semantic token mapping computationally expensive?

Yes, it can be. The process involves a large language model to generate semantic tokens and then an audio model to synthesize the voice. However, researchers are constantly developing more efficient models and techniques like token compression to reduce the computational load and latency.

Can this technology clone any voice?

Some advanced models using semantic tokens, often called zero-shot TTS systems, can clone a voice from just a few seconds of audio. They capture the unique characteristics of the speaker’s voice and can then apply them to new text, even preserving the emotional tone.

How does this improve upon older TTS systems?

Older systems, which rely on phonemes, often sound monotonous and robotic because they lack context. Semantic token mapping allows the AI to understand the meaning and emotion behind the text. As a result, the generated speech has natural-sounding prosody and expressiveness, making it far more realistic and engaging.
