Predictive Cloud Scaling: An SRE’s Proactive Guide

Published on January 6, 2026 by Admin

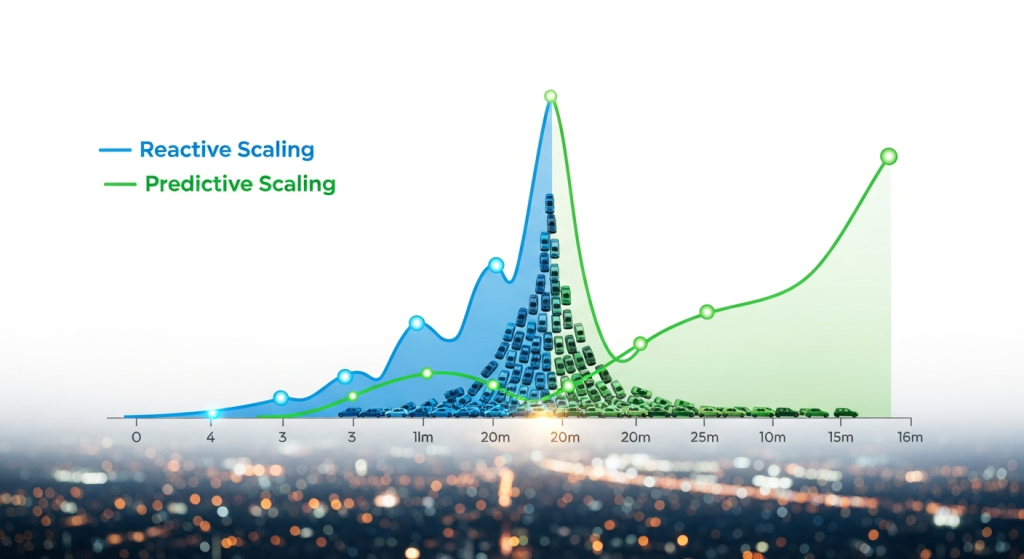

As a Site Reliability Engineer (SRE), your world revolves around stability, performance, and efficiency. You build systems that can withstand the unexpected. However, traditional autoscaling often feels like you’re always one step behind. You wait for a load spike, then react by adding capacity. This reactive approach, while necessary, has a fundamental flaw that can impact user experience.

Predictive cloud scaling flips this model on its head. Instead of reacting to traffic, it anticipates it. This guide explores how predictive scaling works, its benefits for SREs, and how major cloud providers like Google Cloud and AWS are implementing this machine-learning-powered technology to build more responsive and cost-effective systems.

The Inherent Lag of Reactive Autoscaling

For years, reactive autoscaling has been a cornerstone of cloud infrastructure. The concept is simple and powerful. When a metric like CPU utilization or request count crosses a threshold, the autoscaler automatically adds or removes virtual machine (VM) instances. This ensures you have enough capacity for peak loads while saving money during quiet periods.

However, a significant problem arises with applications that have long initialization times. Imagine your application takes several minutes to boot, initialize, and become ready to serve traffic.

If a sudden traffic surge occurs, like when users wake up in the morning, the reactive autoscaler kicks in. It starts creating new VMs, but while these instances are initializing, the existing servers are overwhelmed. Consequently, users might experience slow response times or even errors. You’re scaling, but not fast enough to prevent a negative impact.
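To make the lag concrete, here is a minimal Python sketch of a purely reactive autoscaler. It is an illustration, not any provider's algorithm: the function name `overloaded_minutes`, the per-VM capacity, and the traffic numbers are all hypothetical.

```python
def overloaded_minutes(demand, vm_capacity, init_minutes, start_vms):
    """Count minutes where demand exceeds ready capacity under reactive scaling.

    demand: requests per minute, one value per minute (hypothetical data).
    vm_capacity: requests per minute that one ready VM can serve.
    init_minutes: minutes a new VM needs before it can serve traffic.
    """
    ready = start_vms
    pending = []  # minute at which each booting VM becomes ready
    overloaded = 0
    for minute, load in enumerate(demand):
        # VMs that have finished initializing join the serving pool.
        ready += sum(1 for t in pending if t == minute)
        pending = [t for t in pending if t > minute]
        needed = -(-load // vm_capacity)  # ceiling division
        if needed > ready:
            overloaded += 1
        # Reactive rule: launch only after the shortfall has been observed.
        shortfall = needed - ready - len(pending)
        pending.extend([minute + init_minutes] * max(shortfall, 0))
    return overloaded


surge = [100] * 5 + [400] * 10  # traffic quadruples at minute 5
print(overloaded_minutes(surge, vm_capacity=100, init_minutes=5, start_vms=1))  # 5
print(overloaded_minutes(surge, vm_capacity=100, init_minutes=1, start_vms=1))  # 1
```

In this toy model the number of overloaded minutes tracks the initialization time directly, which is the gap predictive scaling tries to close.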

What is Predictive Cloud Scaling?

Predictive cloud scaling is a proactive approach to infrastructure management. It uses machine learning (ML) models to analyze historical workload data and forecast future demand. Instead of waiting for a load increase, it scales out your infrastructure *in advance* of the predicted traffic.

This method is particularly effective for workloads with predictable patterns. For example, it can learn the daily and weekly cycles of your application’s traffic. It knows that traffic will ramp up at 9 AM on weekdays and slow down on weekends.

By forecasting these patterns, the autoscaler can provision new VMs ahead of time. This gives your application instances enough time to complete their initialization process. As a result, when the wave of user traffic arrives, your capacity is already in place and ready to serve, ensuring a smooth user experience.

How Does Predictive Scaling Work in Practice?

Predictive scaling isn’t magic; it’s a sophisticated system that combines historical data analysis with real-time adjustments. It works in concert with traditional scaling methods to provide a comprehensive solution.

The Forecasting Model

The core of predictive scaling is its ML model. This model consumes historical metric data, most commonly CPU utilization, from your instance group. It then identifies recurring patterns to build a forecast. For instance, AWS Auto Scaling uses these models to create a forecast for the next 48 hours, which is re-evaluated daily.

Similarly, Google Cloud’s model understands weekly and daily patterns for each managed instance group (MIG). It continuously adapts the forecast to match upcoming demand. In fact, on Google Cloud, the autoscaler checks the forecast several times per minute and adjusts capacity accordingly, providing a highly responsive system.
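The providers' actual models are not described here, so the following is only a stand-in to show the idea: a seasonal-average forecast that predicts each upcoming hour from the same hour-of-week slot in the history. The function name `seasonal_forecast` and the sample data are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

HOURS_PER_WEEK = 7 * 24


def seasonal_forecast(history, horizon):
    """Forecast the next `horizon` hours from matching hour-of-week slots.

    history: hourly CPU utilization samples, oldest first. Index 0 is
    assumed to be the first hour of a week.
    """
    by_slot = defaultdict(list)
    for i, value in enumerate(history):
        by_slot[i % HOURS_PER_WEEK].append(value)

    overall = mean(history)  # fallback for slots with no samples yet
    forecast = []
    for h in range(horizon):
        samples = by_slot.get((len(history) + h) % HOURS_PER_WEEK)
        forecast.append(mean(samples) if samples else overall)
    return forecast


# Two weeks of hypothetical data with a daily spike at 09:00.
history = [0.8 if i % 24 == 9 else 0.2 for i in range(2 * HOURS_PER_WEEK)]
next_day = seasonal_forecast(history, horizon=24)
print(next_day[8], next_day[9])  # low utilization at 08:00, spike at 09:00
```

A production model would also handle trend, holidays, and noise; the sketch only captures the recurring daily and weekly cycle the text describes.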

The Critical Role of Initialization Time

The most important configuration you provide is the application initialization period. This is known as the “initialization period” (formerly the “cool down period”) in Google Cloud or “warm-up time” in AWS. This value tells the predictive scaler how long your application takes to be ready to serve traffic after a VM boots.

For example, if you set this value to five minutes, the system will ensure new instances are created at least five minutes before the forecasted load increase. This simple setting is the key that unlocks the “ahead-of-time” scaling capability, directly addressing the lag inherent in reactive systems.
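The arithmetic behind that lead time is simple enough to sketch. The snippet below assumes a forecast expressed as (time, VM count) pairs and shifts each entry earlier by the initialization period; `schedule_launches` is a hypothetical helper, not a provider API.

```python
from datetime import datetime, timedelta


def schedule_launches(forecast, init_period):
    """Shift each forecasted capacity requirement earlier by the init period.

    forecast: list of (time the capacity is needed, number of VMs) pairs.
    Returns (latest time to start launching, number of VMs) pairs.
    """
    return [(needed_at - init_period, vms) for needed_at, vms in forecast]


forecast = [(datetime(2026, 1, 6, 9, 0), 10)]  # 10 VMs needed at 09:00
plan = schedule_launches(forecast, init_period=timedelta(minutes=5))
print(plan[0][0].time(), plan[0][1])  # 08:55:00 10
```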

A Hybrid Approach: Proactive and Reactive Together

A common misconception is that predictive scaling replaces reactive scaling. In reality, they work together beautifully. Predictive scaling forecasts the load and schedules a *minimum* capacity. At the same time, dynamic or reactive scaling continues to monitor real-time metrics.

If an unpredicted spike occurs, the reactive scaler will add instances to handle it. Both Google Cloud and AWS state that their systems always provision whichever VM count is higher, the one required by the predictive forecast or the one required by real-time demand. This hybrid model gives you the best of both worlds: proactive readiness for predictable patterns and reactive defense against unexpected surges. This dual strategy is a core component of running efficiently at scale on either cloud.
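The "take the higher number" rule can be sketched in a few lines. This is an assumption-laden simplification: `desired_instances`, the per-VM load target, and the group limits are hypothetical, and real autoscalers apply additional stabilization logic.

```python
import math


def desired_instances(forecast_load, current_load, target_per_vm,
                      min_vms=1, max_vms=20):
    """Pick the larger of the predictive and reactive recommendations."""
    predictive = math.ceil(forecast_load / target_per_vm)
    reactive = math.ceil(current_load / target_per_vm)
    # Clamp the winner to the group's configured size limits.
    return max(min_vms, min(max_vms, max(predictive, reactive)))


print(desired_instances(800, 300, target_per_vm=100))   # 8: the forecast wins
print(desired_instances(200, 900, target_per_vm=100))   # 9: an unpredicted spike wins
print(desired_instances(5000, 100, target_per_vm=100))  # 20: capped at max_vms
```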

Key Benefits for Site Reliability Engineers

For SREs, the move from reactive to proactive scaling offers tangible benefits that align directly with core reliability and efficiency goals.

Improved Application Responsiveness

The primary advantage is a dramatic improvement in user experience. By having capacity ready *before* the peak, you avoid the lag and potential errors caused by application initialization delays. This means happier users and services that meet their service level objectives (SLOs) more consistently. Google Cloud’s console even shows a metric for “Average VM minutes overloaded per day,” which predictive scaling aims to reduce.

Enhanced Cost Optimization

While it may seem counterintuitive, scaling ahead of time can actually lead to better cost efficiency. Without it, engineers often over-provision capacity around the clock just to be safe, leading to wasted spend during off-peak hours.

Predictive scaling allows you to run a leaner baseline and scale up only shortly before you need the capacity. This “just-in-time” provisioning helps reduce your overall VM costs. The approach fits squarely within FinOps, where operational actions are directly tied to financial outcomes.
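A rough back-of-the-envelope comparison shows where the savings come from. The hourly profile below is invented for illustration, and the calculation ignores the few extra minutes of lead time that predictive scaling adds before each ramp.

```python
def daily_vm_hours(required_per_hour):
    """Compare VM-hours for static peak provisioning vs. demand-following capacity.

    required_per_hour: VMs needed in each hour of the day (hypothetical).
    Returns (static_vm_hours, scaled_vm_hours).
    """
    static = max(required_per_hour) * len(required_per_hour)
    scaled = sum(required_per_hour)
    return static, scaled


# 8 quiet hours, 10 busy hours, 6 moderate hours.
profile = [2] * 8 + [10] * 10 + [4] * 6
print(daily_vm_hours(profile))  # (240, 140)
```

With these made-up numbers, running at peak capacity all day costs 240 VM-hours, while capacity that follows demand costs 140.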

Increased System Reliability

Ultimately, predictive scaling leads to a more stable and reliable system. Proactively matching capacity to demand reduces the strain on your application and infrastructure. This lessens the risk of cascading failures during high-traffic events and makes your service more resilient, which is the fundamental mission of any SRE team.

When is Predictive Scaling the Right Choice?

Predictive scaling is incredibly powerful, but it’s not a universal solution for every workload. It works best under specific conditions.

According to documentation from both Google and AWS, your workload is a great candidate if it meets the following criteria:

- Long Initialization Times: Your application takes a significant amount of time to become service-ready. A general rule of thumb is an initialization period of two minutes or more.

- Predictable Traffic Cycles: Your workload varies with recognizable daily or weekly patterns. This is common for e-commerce sites, news platforms, and B2B applications.

Conversely, it is less effective for workloads with highly erratic, unpredictable traffic spikes. For one-time events, such as a major product launch or a flash sale, schedule-based autoscaling is often a better tool to pre-provision capacity for a specific time window.
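The two criteria above can be turned into a rough screening check. The sketch below measures how strongly hourly load correlates with itself one day earlier and combines that with the two-minute rule of thumb. The 0.7 correlation threshold and the function names are assumptions chosen for illustration, not provider guidance.

```python
from statistics import mean


def lag_correlation(series, lag):
    """Pearson correlation between a series and itself shifted by `lag` steps."""
    a, b = series[:-lag], series[lag:]
    mean_a, mean_b = mean(a), mean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat series has no pattern to learn
    return cov / (var_a * var_b) ** 0.5


def is_predictive_candidate(hourly_load, init_seconds,
                            min_init_seconds=120, min_correlation=0.7):
    """Screen a workload: slow to initialize AND a repeating daily cycle."""
    daily = lag_correlation(hourly_load, lag=24)
    return init_seconds >= min_init_seconds and daily >= min_correlation


# One week of hypothetical load with a business-hours plateau.
week = [0.8 if 9 <= i % 24 < 17 else 0.2 for i in range(7 * 24)]
print(is_predictive_candidate(week, init_seconds=300))  # True
print(is_predictive_candidate(week, init_seconds=30))   # False
```

A fuller check would also test a weekly lag of 168 hours; the daily lag is enough to show the approach.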

Implementing Predictive Scaling: Google Cloud vs. AWS

Both major cloud providers offer robust predictive scaling solutions, though their implementations have slight differences.

Google Cloud (Compute Engine)

On Google Cloud, predictive autoscaling is a feature of Managed Instance Groups (MIGs). To enable it, you simply edit your autoscaler configuration and change the mode from “Off” to “Optimize for availability.”

However, there are some limitations. Google’s predictive scaler currently works only with CPU utilization as the scaling metric. Furthermore, it requires at least three days of CPU-based autoscaling history before it can start generating predictions.
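For teams that manage autoscalers through the API rather than the console, the same setting appears in the autoscaling policy. The sketch below builds the policy body as a plain Python dictionary using field names from the Compute Engine autoscaler resource as I understand them (`coolDownPeriodSec`, `cpuUtilization.predictiveMethod`); the helper name and the values are hypothetical, and the field names are worth verifying against the current API reference before use.

```python
import json


def predictive_autoscaling_policy(min_vms, max_vms, cpu_target, init_seconds):
    """Build an autoscaling policy body with predictive autoscaling enabled."""
    return {
        "minNumReplicas": min_vms,
        "maxNumReplicas": max_vms,
        # Initialization period: how long a new VM needs before serving.
        "coolDownPeriodSec": init_seconds,
        "cpuUtilization": {
            "utilizationTarget": cpu_target,
            # Corresponds to "Optimize for availability" in the console.
            "predictiveMethod": "OPTIMIZE_AVAILABILITY",
        },
    }


policy = predictive_autoscaling_policy(2, 20, cpu_target=0.75, init_seconds=300)
print(json.dumps({"autoscalingPolicy": policy}, indent=2))
```

The gcloud CLI exposes what appears to be the equivalent option as `--cpu-utilization-predictive-method=optimize-availability`.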

Amazon Web Services (EC2)

AWS offers predictive scaling as a native part of EC2 Auto Scaling. The setup is guided by a wizard where you can select the resources to scale. A standout feature is the ability to enable it in “forecast only” mode. This allows you to see the predictions and scheduled actions without actually scaling any instances, which is perfect for evaluation.

Unlike Google’s current implementation, AWS allows you to forecast based on multiple metrics, including CPU utilization, network traffic, or even a custom CloudWatch metric.
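Outside the wizard, a predictive scaling policy can also be created through the EC2 Auto Scaling API. The sketch below only builds the request parameters for `put_scaling_policy`, so it runs without AWS credentials. The group name, policy name, target value, and helper function are hypothetical, and the parameter names reflect my reading of the API, so check them against the current boto3 documentation.

```python
def predictive_policy_request(group_name, target_cpu_percent, buffer_seconds,
                              forecast_only=True):
    """Build keyword arguments for an EC2 Auto Scaling predictive policy."""
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": "cpu-predictive-policy",  # hypothetical name
        "PolicyType": "PredictiveScaling",
        "PredictiveScalingConfiguration": {
            "MetricSpecifications": [
                {
                    "TargetValue": target_cpu_percent,
                    "PredefinedMetricPairSpecification": {
                        "PredefinedMetricType": "ASGCPUUtilization"
                    },
                }
            ],
            # "ForecastOnly" generates forecasts without changing capacity.
            "Mode": "ForecastOnly" if forecast_only else "ForecastAndScale",
            # Seconds ahead of the forecast to launch instances.
            "SchedulingBufferTime": buffer_seconds,
        },
    }


request = predictive_policy_request("my-asg", 40.0, buffer_seconds=300)
print(request["PredictiveScalingConfiguration"]["Mode"])  # ForecastOnly

# With boto3 installed and credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("autoscaling").put_scaling_policy(**request)
```

Starting in forecast-only mode and switching to `ForecastAndScale` after reviewing the predictions mirrors the evaluation workflow described above.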

Conclusion: The Future is Proactive

Predictive cloud scaling marks a significant evolution in infrastructure automation. For Site Reliability Engineers, it represents a shift from a defensive, reactive posture to an intelligent, proactive one. By leveraging machine learning to anticipate demand, you can build systems that are not only more reliable and performant but also more cost-efficient.

By understanding the principles behind it and how to apply it to the right workloads, you can stay ahead of traffic, eliminate performance bottlenecks, and ensure your services remain stable and responsive for your users.

Frequently Asked Questions

Is predictive autoscaling a free feature?

Yes, both Google Cloud and AWS offer the predictive scaling feature itself at no extra charge. However, you are still responsible for the costs of the Compute Engine or EC2 resources that are provisioned based on the scaling plan. The goal is to optimize this spending, not eliminate it.

How long does it take for predictive scaling to start working?

There is a learning period. Google Compute Engine requires at least three days of autoscaling history to generate its first predictions. AWS requires at least one day of historical data to begin forecasting.

What happens if a traffic spike is not predicted?

This is where the hybrid approach is crucial. Predictive scaling works alongside traditional reactive (or dynamic) scaling. If an unpredicted spike occurs, the reactive scaler will detect the real-time load increase and add capacity. The system ensures reliability by always using whichever scaling policy demands more instances.

Does predictive scaling only work based on CPU utilization?

It depends on the provider. Currently, Google Cloud’s predictive autoscaling for MIGs works only with CPU utilization as the scaling metric. In contrast, AWS allows you to forecast based on CPU utilization, network I/O, Application Load Balancer request counts, or a custom metric.