AI’s New Vision: Sub-Pixel Detail in Medical Images

Published on January 25, 2026 by Admin

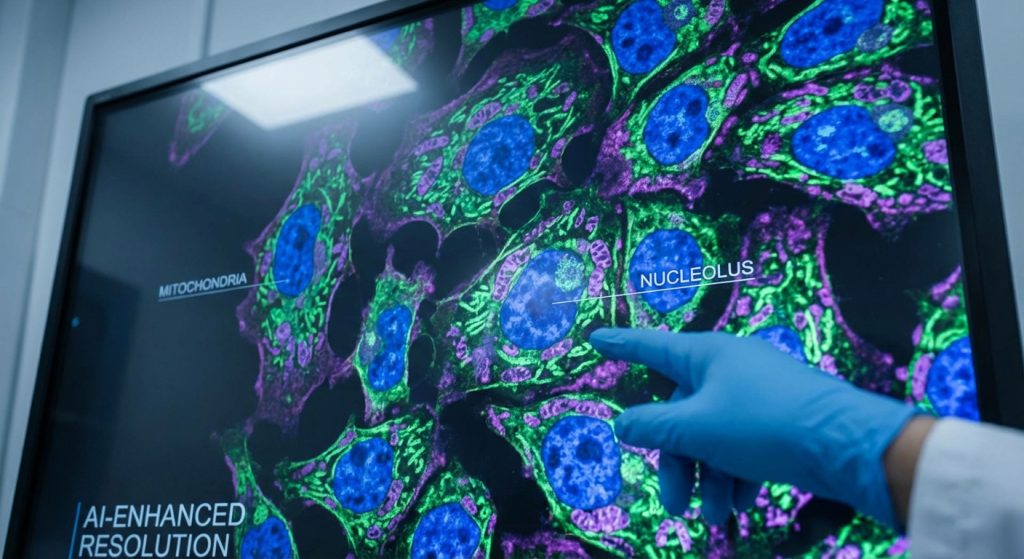

In medical imaging, every detail matters. For researchers and clinicians, the ability to discern the faintest anomaly can change a patient’s outcome. However, traditional digital images are limited by their pixel grid. This can obscure critical information. Consequently, a new technique is emerging to overcome this barrier: sub-pixel token refinement. This approach allows AI models to see beyond the pixel, unlocking a new level of detail and diagnostic potential.

This article explores the world of sub-pixel token refinement. Firstly, we will explain the fundamental problem with pixel-based analysis. Then, we will dive into how this innovative method works. Moreover, we will cover its significant benefits and real-world applications in fields like oncology and neurology. Finally, we will address the challenges and future directions for this transformative technology.

The Pixel Problem in Medical Diagnostics

Digital images are made of pixels: small grid cells that each store a single color or intensity value. For a long time, increasing the number of pixels was the primary way to improve image quality. However, in medical imaging, there are physical and practical limits to resolution. For example, MRI and CT scanners are constrained by factors such as scan time, signal-to-noise ratio, and, for CT, radiation dose, all of which limit how fine a detail they can capture.

This creates a significant challenge. Early-stage diseases, such as micro-metastases or subtle neural degeneration, may be smaller than a single pixel. Therefore, they can be easily missed or misinterpreted by both human eyes and standard AI algorithms. The rigid grid of pixels forces a loss of information, averaging out the details within each square.
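To make the averaging effect concrete, here is a minimal sketch in plain Python. It assumes a simple box-average model of how a pixel records signal, and the intensity profile is a made-up example, not real scan data.

```python
def box_downsample(signal, factor):
    """Average each run of `factor` fine samples into one pixel value."""
    if len(signal) % factor != 0:
        raise ValueError("signal length must be a multiple of factor")
    return [
        sum(signal[i:i + factor]) / factor
        for i in range(0, len(signal), factor)
    ]

# Hypothetical fine-scale profile: background 0.0 with a small bright
# feature (1.0) that covers only 2 of the 8 samples inside one pixel.
fine_profile = [0.0] * 32
fine_profile[10] = 1.0
fine_profile[11] = 1.0

pixels = box_downsample(fine_profile, 8)
print(pixels)  # [0.0, 0.25, 0.0, 0.0]
```

The feature had full contrast at the fine scale, but the pixel that contains it reports only 0.25. Its exact position and width inside that pixel are no longer directly visible.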

Imagine trying to read a tiny-print book where each letter is blurry. You might guess the words, but you can’t be certain. This is the problem researchers face with standard pixel resolution. Sub-pixel refinement, in essence, sharpens those letters.

What is Sub-Pixel Token Refinement?

Sub-pixel token refinement is a computational technique that allows AI to represent and analyze information at a resolution finer than the image’s native pixel grid. Instead of treating a pixel as the smallest unit of information, the model learns to understand the data that exists *between* the pixels. It achieves this by intelligently interpolating and placing information “tokens” in a continuous space.

Essentially, the AI model is no longer bound by the digital grid. It can define the edge of a cell or a lesion with much greater precision. This results in a representation of the image that is far more detailed than the original source data would suggest.
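One way to picture "reading between the pixels" is to sample the image at a continuous coordinate. The sketch below uses bilinear interpolation, a common choice for this kind of lookup. It is an illustrative assumption; the technique described here may use a different, learned interpolation scheme, and the function name `bilinear_sample` is hypothetical.

```python
import math

def bilinear_sample(image, x, y):
    """Sample a 2D image (list of rows) at a continuous position.

    x is the column coordinate and y is the row coordinate, both
    measured in pixels from the center of the top-left pixel.
    """
    height, width = len(image), len(image[0])
    # Clamp the query point so it stays inside the image.
    x = min(max(x, 0.0), width - 1.0)
    y = min(max(y, 0.0), height - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0
    # Blend along x on the two neighboring rows, then blend along y.
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

image = [[0.0, 10.0],
         [20.0, 30.0]]
center_value = bilinear_sample(image, 0.5, 0.5)
print(center_value)  # 15.0
```

The query point (0.5, 0.5) sits exactly between four pixel centers, so the result is their average. A token placed at a fractional position can read image content this way without snapping to the grid.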

Going Beyond the Grid

Traditional AI models for image analysis, like Convolutional Neural Networks (CNNs), operate directly on the pixel grid. They analyze patterns by looking at groups of pixels. While effective, their precision is ultimately capped by the image’s resolution. Sub-pixel methods, on the other hand, often use transformer-based architectures.

These models can learn relationships between different parts of an image more fluidly. A “token” can represent a conceptual piece of the image, and the model learns to place these tokens with sub-pixel accuracy. As a result, it builds a more faithful internal model of the underlying anatomy or pathology.
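How might a model output a position that falls between pixels? One widely used trick in keypoint localization is the soft-argmax: instead of picking the single highest-scoring pixel, the model takes a weighted average of all positions. The sketch below is a 1D illustration under that assumption. It is not necessarily how any particular sub-pixel token model works, and the `temperature` parameter is a hypothetical tuning knob.

```python
import math

def soft_argmax_1d(scores, temperature=1.0):
    """Return the softmax-weighted average index of `scores`."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    peak = max(scores)
    # Subtracting the peak keeps the exponentials numerically stable.
    weights = [math.exp((s - peak) / temperature) for s in scores]
    total = sum(weights)
    return sum(i * w for i, w in enumerate(weights)) / total

# Two neighboring pixels score equally high, so the estimated
# position lands halfway between them.
position = soft_argmax_1d([0.0, 2.0, 2.0, 0.0])
print(round(position, 6))  # 1.5
```

A hard argmax would have to choose index 1 or index 2. The soft version returns 1.5, a location that does not exist on the pixel grid.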

How the Refinement Process Works

The process begins with a standard digital medical image. The AI model first performs a coarse analysis to identify regions of interest. Then, within these regions, it applies the refinement technique. The model predicts where the precise boundaries and features should lie, even if they fall between the centers of pixels.

This is not simply image upscaling. While related, upscaling often invents new pixel data based on surrounding pixels. Sub-pixel refinement, in contrast, uses the model’s learned understanding of anatomical structures to more accurately place the features that are already implicitly present in the data. The goal is a more truthful representation of the anatomy, not merely a larger image.
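The coarse-then-refine idea from this section can be sketched with a classic, non-learned stand-in: find the pair of pixels where an intensity profile crosses a threshold, then interpolate linearly to estimate where the boundary lies between them. A trained model would replace the linear rule with learned anatomical knowledge; the function names and the sample profile here are hypothetical.

```python
def coarse_edge_index(profile, threshold):
    """Coarse step: first index i where the profile crosses the
    threshold somewhere between pixel i and pixel i + 1."""
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if a != b and (a - threshold) * (b - threshold) <= 0:
            return i
    return None

def refine_edge(profile, threshold):
    """Fine step: linearly interpolate the crossing position."""
    i = coarse_edge_index(profile, threshold)
    if i is None:
        return None
    a, b = profile[i], profile[i + 1]
    return i + (threshold - a) / (b - a)

# Made-up intensity profile across a boundary. The 0.25 pixel is a
# partially covered pixel sitting on the edge.
profile = [0.0, 0.0, 0.25, 1.0, 1.0]
edge = refine_edge(profile, threshold=0.5)
print(round(edge, 4))  # 2.3333
```

The coarse step only says "somewhere between pixel 2 and pixel 3". The refinement step narrows that to about 2.33, using information that was already present in the pixel values.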

Key Benefits for Medical Imaging Researchers

The adoption of sub-pixel token refinement offers several transformative advantages for medical research. These benefits directly address some of the most persistent challenges in computational medicine, leading to better tools and improved patient care.

Unprecedented Image Clarity

The most immediate benefit is a dramatic improvement in effective image resolution. Researchers can visualize fine structures that were previously blurred or invisible. For instance, this could mean clearly delineating the invasive front of a tumor or observing the subtle thinning of a retinal nerve fiber layer. This clarity is crucial for understanding disease mechanisms at a micro-level.

Improved Diagnostic Accuracy

Greater clarity can translate into higher accuracy. An AI model equipped with sub-pixel refinement has more precise evidence to work with, so it can make more confident classifications. It can reduce false negatives, where a disease is missed, and false positives, where a benign anomaly is flagged as malignant. This boosts the reliability of automated diagnostic systems, making them more trustworthy as clinical aids.

Enhanced Quantitative Analysis

Medical research often relies on precise measurements. For example, tracking the change in a tumor’s volume over time is critical for assessing treatment response. Standard pixel-based measurements can be imprecise because a pixel that straddles a boundary contains a mix of tissues and records only their averaged signal, a phenomenon known as the partial volume effect. Because sub-pixel refinement defines boundaries more accurately, it allows for more precise and repeatable quantitative analysis of medical images.
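A small worked example shows why boundary pixels matter for measurement. The sketch assumes a simple two-tissue linear mixing model, where a pixel’s value reflects the fraction of it covered by the lesion. The pixel values, intensities, and pixel area are all made up for illustration.

```python
def area_by_counting(pixels, threshold, pixel_area):
    """Count whole pixels at or above the threshold."""
    count = sum(1 for row in pixels for v in row if v >= threshold)
    return count * pixel_area

def area_by_fraction(pixels, background, lesion, pixel_area):
    """Sum the estimated lesion fraction of every pixel, assuming
    intensity mixes linearly between background and lesion."""
    total = 0.0
    for row in pixels:
        for v in row:
            fraction = (v - background) / (lesion - background)
            total += min(max(fraction, 0.0), 1.0)
    return total * pixel_area

# Hypothetical 3x3 patch: one fully covered pixel surrounded by four
# pixels that the lesion covers by one quarter each.
patch = [[0.0, 0.25, 0.0],
         [0.25, 1.0, 0.25],
         [0.0, 0.25, 0.0]]

counted = area_by_counting(patch, threshold=0.5, pixel_area=1.0)
fractional = area_by_fraction(patch, background=0.0, lesion=1.0,
                              pixel_area=1.0)
print(counted, fractional)  # 1.0 2.0
```

Whole-pixel counting reports half the area that the fractional estimate does, because it discards every partially covered pixel. For small lesions, where boundary pixels make up most of the object, this gap can dominate the measurement.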

Applications Across Medical Specialties

The potential applications of sub-pixel token refinement span numerous fields of medicine. Anywhere that fine detail is paramount, this technology can offer a significant leap forward.

Oncology and Pathology

In digital pathology, AI analyzes high-resolution scans of tissue slides. Sub-pixel refinement can help pathologists identify individual cancerous cells with greater accuracy. Furthermore, it can precisely map the tumor microenvironment, which is vital for predicting disease progression and selecting personalized therapies.

Neurology and Brain Imaging

For neurologists studying diseases like Alzheimer’s or multiple sclerosis, subtle changes in brain structure are key biomarkers. Sub-pixel techniques can enhance the analysis of MRI scans, allowing for earlier and more accurate detection of cortical thinning or the formation of small lesions. This could substantially improve early diagnosis.

Ophthalmology

Retinal scans are used to diagnose conditions like glaucoma and diabetic retinopathy. These diseases cause minute changes to blood vessels and nerve layers in the eye. With sub-pixel detail, AI systems could detect these changes earlier than traditional methods, enabling timely intervention to prevent vision loss.

Challenges and Future Directions

Despite its immense promise, sub-pixel token refinement is not without its hurdles. Overcoming these challenges will be key to its widespread adoption in clinical and research settings.

The Computational Cost

Operating at a sub-pixel level is computationally intensive. It requires significant GPU power and memory, which can be a barrier for some research labs and hospitals. Therefore, ongoing research focuses on creating more efficient models that can deliver these benefits without prohibitive hardware requirements.
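A rough back-of-the-envelope calculation shows where the cost comes from. The sketch assumes a transformer with standard full self-attention, where every token attends to every other token, so the number of pairwise interactions grows with the square of the token count. The image size, patch size, and the `refine` factor are hypothetical values chosen for illustration.

```python
def token_count(height, width, patch, refine=1):
    """Tokens for an image tiled into patch x patch squares, with each
    patch side subdivided `refine` times (a hypothetical knob)."""
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of patch")
    return (height // patch * refine) * (width // patch * refine)

def attention_pairs(n_tokens):
    """Pairwise interactions in one full self-attention layer."""
    return n_tokens * n_tokens

base_tokens = token_count(512, 512, 16)            # 1024
fine_tokens = token_count(512, 512, 16, refine=2)  # 4096
ratio = attention_pairs(fine_tokens) // attention_pairs(base_tokens)
print(base_tokens, fine_tokens, ratio)  # 1024 4096 16
```

Doubling the sampling density along each axis quadruples the token count and multiplies the attention work by sixteen. This is why restricting refinement to regions of interest, rather than the whole image, is attractive.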

Model Complexity and Training

Developing and training these sophisticated AI models is complex. It requires vast, high-quality datasets and specialized expertise in machine learning. In addition, validating the model’s accuracy is a rigorous process. To build practical tools, researchers must carefully balance the number of tokens, which affects both output quality and accuracy, against the computational overhead it adds.

The Future is Hyper-Detailed

Looking ahead, sub-pixel token refinement is set to become a cornerstone of next-generation medical imaging AI. As models become more efficient and hardware more powerful, this technology will move from the research lab into routine clinical workflows. It promises a future where diagnoses are faster, more accurate, and more personalized, all by teaching machines to see the details hidden between the pixels.

Frequently Asked Questions

Is sub-pixel refinement just a fancy term for image upscaling?

Not exactly. While both aim to increase detail, upscaling typically generates new pixel values to create a larger image, which can sometimes introduce artifacts. Sub-pixel refinement, however, uses an AI model’s understanding of the subject to more accurately place features in a continuous space, providing a more faithful representation of the underlying data.

What kind of hardware is needed to run these models?

Currently, training and running models with sub-pixel refinement requires high-end GPUs with substantial VRAM. The exact requirements depend on the model’s complexity and the image size. However, research is ongoing to optimize these models for more accessible hardware.

How does this technology affect the diagnostic workflow?

In the future, this technology could act as a powerful assistant for radiologists and pathologists. An AI could pre-process images to highlight areas of concern with enhanced, sub-pixel detail. This would allow human experts to focus their attention more effectively and make diagnoses with greater confidence and speed.

Is this technology approved for clinical use?

Sub-pixel token refinement is primarily a research-stage technology at present. While some components may be part of experimental tools, it is not yet a standard feature in FDA-approved diagnostic software. Rigorous clinical validation is required before it can be widely deployed for patient diagnosis.