A Guide to Reducing GPU Memory via Token Quantization

Published on January 24, 2026 by Admin

Why GPU Memory is a Critical Bottleneck

Large Language Models (LLMs) have grown rapidly in size, and their complexity demands vast computational resources. In particular, the GPU’s video random-access memory (VRAM) is often the limiting factor. Every parameter and every intermediate result needs space in this finite memory pool.

When VRAM runs out, performance suffers dramatically. The system may fall back to slower system RAM or fail entirely. This bottleneck restricts the size of models you can deploy and limits the number of users you can serve simultaneously. Therefore, optimizing memory usage is not just a goal; it is a necessity.

The High Cost of VRAM

High-capacity GPUs are expensive. Equipping servers with enough VRAM to handle state-of-the-art models leads to soaring hardware costs. Consequently, any technique that reduces memory consumption directly translates to significant financial savings. It allows you to achieve more with your existing hardware.

Understanding Tokens and Their Memory Footprint

To understand the solution, we must first understand the problem’s source. AI models do not process raw text or data directly. Instead, they split input into units called tokens. A token can be a word, part of a word, or even a single character.

A token on its own is just an integer ID. The model maps each ID to an embedding vector and then processes it using its weights and intermediate activations. These values are typically stored as floating-point numbers such as FP32 or FP16, so each one takes up 32 or 16 bits. While this precision preserves accuracy, it consumes a large amount of memory. When a model has billions of parameters, the memory load quickly adds up.
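To get a feel for how quickly this adds up, weight memory can be estimated as the parameter count multiplied by the bytes per value. The following is a minimal sketch: the function name and the 7-billion-parameter figure are illustrative assumptions, and the estimate ignores activations, the KV cache, and framework overhead.

```python
# Bits needed to store one value in a few common formats.
BITS_PER_VALUE = {"fp32": 32, "fp16": 16, "int8": 8, "int4": 4}


def weight_memory_gib(num_params: int, fmt: str) -> float:
    """Approximate memory for the weights alone, in GiB (2**30 bytes)."""
    total_bytes = num_params * BITS_PER_VALUE[fmt] / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7-billion-parameter model
    for fmt in BITS_PER_VALUE:
        print(f"{fmt}: {weight_memory_gib(params, fmt):.1f} GiB")
```

Halving the bits per value halves the estimate, which is why the choice of number format matters so much at this scale.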

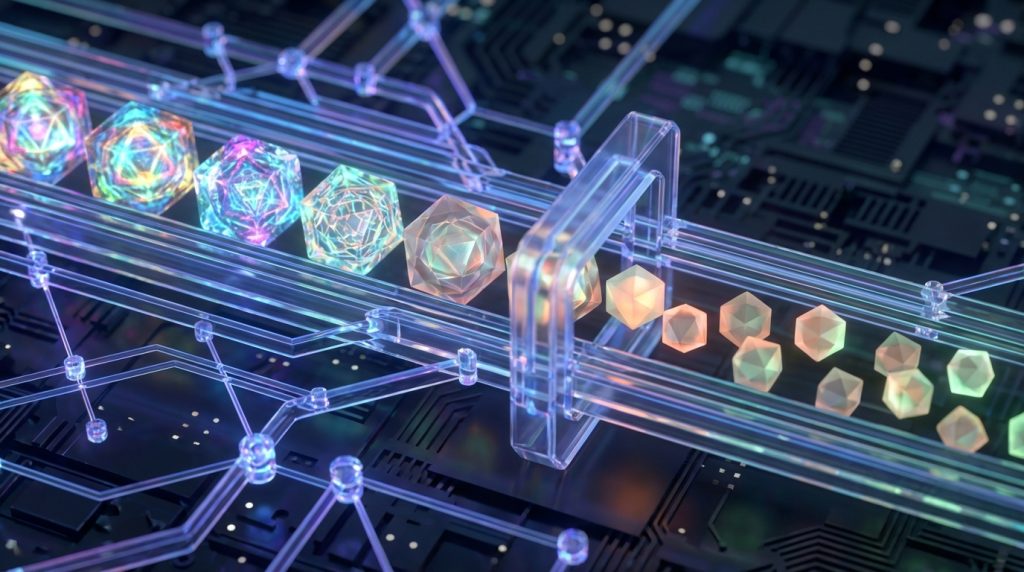

Introducing Token Quantization: The Core Concept

Token quantization is a process that reduces the numerical precision of the values a model stores and computes for its tokens. Think of it like using a simpler ruler. Instead of measuring in millimeters (high precision), you measure only in whole centimeters (lower precision). You lose some detail, but the measurement is much simpler and faster to write down.

Similarly, quantization converts high-precision numbers into lower-precision ones. For example, it can change a 32-bit floating-point number into an 8-bit integer (INT8). This simple change has a massive impact. The memory required to store that number drops by 75%.
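The conversion itself can be sketched in a few lines. This example assumes symmetric "absmax" scaling, which is one common scheme among several, and the function names are illustrative rather than taken from any particular library.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    absmax = max(abs(v) for v in values)
    scale = absmax / 127 if absmax > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.27, 0.01, 1.0]
    q, scale = quantize_int8(original)
    restored = dequantize(q, scale)
    for o, r in zip(original, restored):
        print(f"{o:+.4f} -> {r:+.4f}")
```

Each restored value differs from the original by at most about half of the scale. That rounding error is the detail lost by switching to the coarser ruler.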

The Direct Benefits of Quantization

Applying this technique provides several key advantages for hardware optimization. The benefits go beyond just saving memory.

- Smaller Model Size: Quantizing the model’s weights makes the model file itself much smaller. This reduces storage costs and shortens loading times.

- Reduced Memory Usage: Both the model and its intermediate calculations (like the KV cache) consume less VRAM. This allows you to run larger models or increase batch sizes.

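- Faster Inference Speed: Many modern GPUs have hardware paths that execute low-precision integer math faster than floating-point math, and smaller values also mean less data to move in and out of memory. As a result, quantization can lead to lower latency and higher throughput.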
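To illustrate the memory-usage point, the size of the KV cache can be approximated from the model's shape: one key and one value vector per layer, per attention head, per token, per sequence in the batch. The sketch below uses that standard approximation; the configuration numbers are hypothetical and do not describe a specific model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim,
                   seq_len, batch_size, bits_per_value):
    """Approximate KV cache size in bytes for a transformer decoder."""
    # Factor of 2 accounts for storing both keys and values.
    num_values = 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size
    return num_values * bits_per_value // 8


if __name__ == "__main__":
    # Hypothetical model shape and serving load.
    shape = dict(num_layers=32, num_kv_heads=32, head_dim=128,
                 seq_len=4096, batch_size=8)
    for label, bits in [("fp16", 16), ("int8", 8)]:
        gib = kv_cache_bytes(**shape, bits_per_value=bits) / 2**30
        print(f"{label}: {gib:.1f} GiB")
```

With these assumed numbers the cache needs 16 GiB at 16 bits per value and 8 GiB at 8 bits, so halving the precision frees room for a larger batch or a longer context.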

Key Quantization Techniques to Know

There are two primary methods for applying quantization. Each has its own trade-offs between ease of implementation and final model accuracy.

First, Post-Training Quantization (PTQ) is the simpler approach. You take a fully trained, high-precision model and convert its weights to a lower precision. This method is fast and does not require retraining or access to the original training data. However, it can sometimes lead to a noticeable drop in accuracy.

On the other hand, Quantization-Aware Training (QAT) is a more involved process. It simulates the effects of quantization during the model’s training phase, so the model learns to be robust to the precision loss from the start. Therefore, QAT usually results in better accuracy than PTQ, but it requires more time and computational resources.

Exploring various AI writing strategies for lower token consumption can also complement these hardware-level optimizations.
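The QAT idea of simulating quantization during training can be shown on a toy problem. The sketch below assumes the commonly used "fake quantization" approach with a straight-through gradient: the forward pass only sees the rounded weight, while updates are applied to a full-precision copy. The one-parameter model, the data, and the function names are all illustrative assumptions.

```python
def fake_quant(w: float, scale: float, qmax: int = 127) -> float:
    """Quantize then immediately dequantize, so w carries rounding error."""
    q = max(-qmax, min(qmax, round(w / scale)))
    return q * scale


def train_with_fake_quant(xs, ys, scale, lr=0.01, steps=200):
    """Fit y = w * x while the forward pass only ever sees the quantized w."""
    w = 0.0  # full-precision "shadow" weight that receives the updates
    for _ in range(steps):
        wq = fake_quant(w, scale)
        # Gradient of the mean squared error with respect to wq.
        grad = sum(2 * (wq * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        # Straight-through estimator: treat d(wq)/d(w) as 1 and update w.
        w -= lr * grad
    return w, fake_quant(w, scale)


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0]
    ys = [2.0 * x for x in xs]  # toy data generated with a true weight of 2.0
    w, wq = train_with_fake_quant(xs, ys, scale=0.5)
    print(f"shadow weight {w:.4f}, quantized weight {wq}")
```

In this toy run the shadow weight stops moving once it rounds to the grid point 2.0, because the loss is computed on the quantized value. PTQ, by contrast, would skip this loop and simply round an already trained weight.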

How to Implement Token Quantization

Getting started with quantization is more accessible than ever. Many modern AI frameworks and libraries have built-in tools to assist you. The general process involves a few logical steps.

Step 1: Profile Your Model

Before you optimize, you need a baseline. First, measure your model’s current memory usage and performance. Identify the key bottlenecks. Is the VRAM being exhausted by the model’s weights or by the KV cache during active use? This data will help you gauge the success of your efforts.

Step 2: Choose Your Tools

You do not need to build a quantization system from scratch. Libraries like Hugging Face Transformers, bitsandbytes, and NVIDIA’s TensorRT-LLM offer powerful, easy-to-use quantization functions. For instance, loading a model in 8-bit or 4-bit mode can often be done with a single line of code. These principles are not just for language; you can also use quantized models for faster, cheaper photo generation.
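As a rough sketch of what loading a model in 8-bit or 4-bit mode can look like with Hugging Face Transformers and bitsandbytes: the helper names here are hypothetical, the model ID is a placeholder you would replace, and the code assumes `transformers`, `bitsandbytes`, and `accelerate` are installed on a machine with a supported GPU. Check the libraries' current documentation before relying on specific options.

```python
def build_quant_kwargs(bits: int) -> dict:
    """Keyword arguments for BitsAndBytesConfig (hypothetical helper)."""
    if bits == 8:
        return {"load_in_8bit": True}
    if bits == 4:
        # "nf4" is one of the 4-bit data types bitsandbytes supports.
        return {"load_in_4bit": True, "bnb_4bit_quant_type": "nf4"}
    raise ValueError("bits must be 4 or 8")


def load_quantized(model_id: str, bits: int = 8):
    """Load a causal language model with quantized weights."""
    # Imported lazily so this file can be read and tested without a GPU stack.
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    config = BitsAndBytesConfig(**build_quant_kwargs(bits))
    return AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=config,
        device_map="auto",  # let accelerate place layers on available devices
    )


# Example call (placeholder model ID, not run here):
# model = load_quantized("your-org/your-model", bits=4)
```

Keeping the configuration in one small helper makes it easy to switch between 8-bit and 4-bit runs when you compare results against your baseline.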

Step 3: Apply and Test

Apply the quantization technique to your model. Then, run the same profiling tests you performed in the first step. Compare the new memory usage and inference speed to your baseline. Crucially, you must also evaluate the model’s output accuracy to ensure the performance trade-off is acceptable for your application.
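The comparison against the baseline can be kept honest with a small helper like the one below. It is a minimal sketch: the `Metrics` fields, the numbers in the example, and the accuracy threshold are assumptions, and you would fill them in from your own profiling and evaluation runs.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    peak_memory_gib: float
    latency_ms: float
    accuracy: float  # task metric in [0, 1], higher is better


def compare(baseline: Metrics, quantized: Metrics,
            max_accuracy_drop: float = 0.02) -> dict:
    """Summarize the trade-off between a baseline and a quantized run."""
    accuracy_drop = baseline.accuracy - quantized.accuracy
    return {
        "memory_saved_pct": 100 * (1 - quantized.peak_memory_gib / baseline.peak_memory_gib),
        "speedup": baseline.latency_ms / quantized.latency_ms,
        "accuracy_drop": accuracy_drop,
        "acceptable": accuracy_drop <= max_accuracy_drop,
    }


if __name__ == "__main__":
    # Made-up measurements purely for illustration.
    before = Metrics(peak_memory_gib=16.0, latency_ms=40.0, accuracy=0.80)
    after = Metrics(peak_memory_gib=8.0, latency_ms=20.0, accuracy=0.79)
    print(compare(before, after))
```

Deciding on the acceptable accuracy drop before you run the comparison helps avoid rationalizing a poor result after the fact.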

Potential Challenges and Solutions

The most significant challenge with quantization is the potential loss of model accuracy. Reducing precision can sometimes degrade the model’s ability to make nuanced predictions.

However, you can mitigate this issue. Using a small, representative dataset to calibrate the quantization process can significantly improve results. This helps the algorithm find the optimal way to map high-precision values to a lower-precision range. Furthermore, using mixed-precision techniques, where only certain parts of the model are quantized, offers a great balance between efficiency and performance.
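One way to picture calibration is as choosing the scale from sample data instead of from the single largest value. The sketch below compares a plain absmax scale with a percentile-based scale on synthetic data containing one outlier. The percentile rule, the data, and the function names are illustrative assumptions, not the method of any specific tool.

```python
def absmax_scale(samples, qmax=127):
    """Scale chosen so the largest magnitude maps to qmax."""
    return max(abs(v) for v in samples) / qmax


def percentile_scale(samples, pct=99.0, qmax=127):
    """Scale from the pct-th percentile of |x|, so rare outliers get clipped."""
    mags = sorted(abs(v) for v in samples)
    idx = min(len(mags) - 1, int(len(mags) * pct / 100))
    return mags[idx] / qmax


def mean_abs_error(values, scale, qmax=127):
    """Average round-trip error when quantizing values with the given scale."""
    total = 0.0
    for v in values:
        q = max(-qmax, min(qmax, round(v / scale)))
        total += abs(v - q * scale)
    return total / len(values)


if __name__ == "__main__":
    typical = [i / 500 - 1 for i in range(1000)]  # values spread over [-1, 1)
    calibration_set = typical + [100.0]           # plus one extreme outlier
    for name, fn in [("absmax", absmax_scale), ("percentile", percentile_scale)]:
        scale = fn(calibration_set)
        print(f"{name}: scale={scale:.5f}, "
              f"error on typical values={mean_abs_error(typical, scale):.5f}")
```

The percentile scale gives up accuracy on the outlier, which is clipped, in exchange for much finer resolution on the values that occur most often. Whether that trade is worthwhile depends on how much the outliers matter to your model, which is why the calibration data needs to be representative.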

Frequently Asked Questions (FAQ)

What’s the difference between weight and activation quantization?

Weight quantization reduces the precision of the model’s stored parameters. This shrinks the model’s size on disk and in memory. Activation quantization, on the other hand, reduces the precision of intermediate values calculated during inference. The KV cache holds one kind of intermediate value, the attention keys and values for previous tokens, so lowering its precision is crucial for reducing memory use during long or heavily batched generation.

How much memory can I really save?

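The savings depend on the starting and target precision. For example, moving from FP16 (16 bits) to INT8 (8 bits) cuts memory usage for those values by 50%. Moving from FP16 to INT4 (4 bits) cuts it by 75%. Real-world savings are slightly smaller because quantized formats also store scaling factors alongside the values. Even so, the savings are substantial, especially for multi-billion parameter models.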

Will quantization work on any GPU?

Most modern GPUs support lower-precision integer operations. However, the performance gains are most significant on newer architectures specifically designed to accelerate them, such as those with NVIDIA’s Tensor Cores. Always check your hardware’s documentation for optimal performance.

Conclusion: A Smarter Path to Hardware Efficiency

GPU memory constraints are a major hurdle in deploying large-scale AI. Token quantization provides a direct and effective strategy to overcome this challenge. By reducing the numerical precision of tokens, engineers can drastically lower VRAM consumption.

This technique shrinks model sizes, accelerates inference, and ultimately reduces hardware costs. While it involves a trade-off with model accuracy, modern tools and methods make it easier than ever to implement quantization with minimal performance loss. For any hardware optimization engineer working with AI, mastering token quantization is an essential skill for building efficient and scalable systems.