Mastering Latent Space for Efficient Media Creation

Published on January 24, 2026 by Admin

What Exactly Is Latent Space?

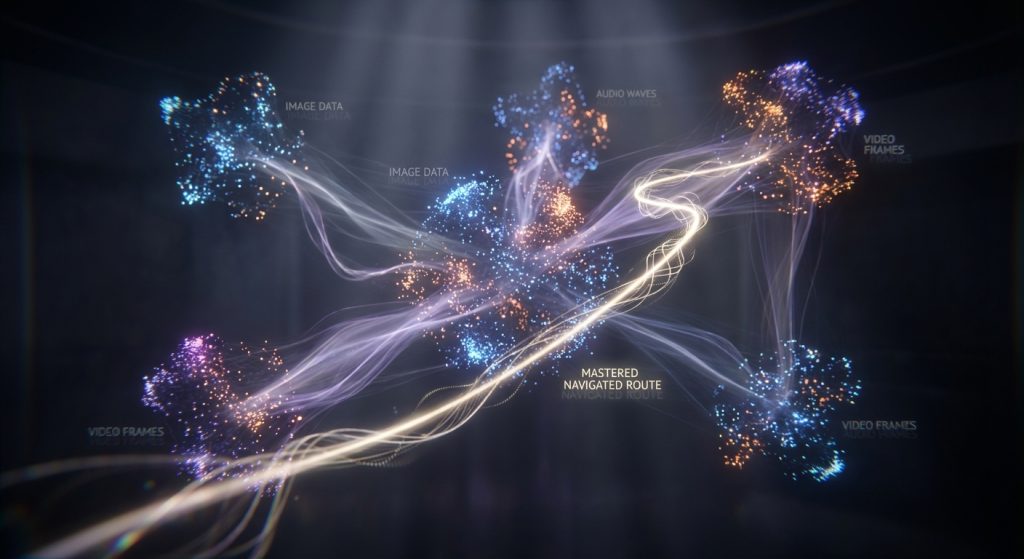

Latent space can seem abstract, but it’s a powerful idea. In essence, it is a compressed, meaningful representation of data. Generative models such as VAEs and GANs learn the structure of this space from the large datasets they are trained on. Each point in this space can be decoded into a media asset.

A Simple Analogy: The Data Library Map

Imagine a massive library with millions of photos. Without a catalog, finding a specific photo, or a group of similar photos, would be nearly impossible. Latent space acts like a highly organized map of this library, and an autoencoder is one kind of model that builds such a map. The process works in two steps:

- The Encoder: This part of the model takes a high-resolution image and compresses it into a small set of numbers. This compressed point is its location on the latent space map.

- The Decoder: This part takes a point from the map and reconstructs it back into a full-resolution image.

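As a result, points that are close together on this map tend to represent visually similar images. A plain autoencoder does not guarantee this; VAEs add a training objective that encourages a smoother, more continuous map.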
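To make the encoder/decoder split concrete, here is a minimal sketch in Python with NumPy. It uses principal component analysis (PCA) as a stand-in for a trained autoencoder: a linear autoencoder trained with squared error learns the same subspace PCA finds, whereas real models like VAEs use nonlinear neural networks. The names `fit_linear_autoencoder`, `encode`, and `decode` are hypothetical, chosen for illustration.

```python
import numpy as np

def fit_linear_autoencoder(X, latent_dim):
    """Fit a PCA-based linear autoencoder (illustrative stand-in for a trained model)."""
    mean = X.mean(axis=0)
    # Rows of Vt are principal directions, sorted by explained variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    W = Vt[:latent_dim]  # shape (latent_dim, n_features), orthonormal rows
    return mean, W

def encode(x, mean, W):
    """Compress data points into latent coordinates (their location on the map)."""
    return (x - mean) @ W.T

def decode(z, mean, W):
    """Reconstruct full-size data points from latent coordinates."""
    return z @ W + mean

rng = np.random.default_rng(0)
# Toy dataset: 200 points in 10 dimensions that really vary along only 2 hidden factors.
factors = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = factors @ mixing + 0.01 * rng.normal(size=(200, 10))

mean, W = fit_linear_autoencoder(X, latent_dim=2)
Z = encode(X, mean, W)      # shape (200, 2): each row is a point on the map
X_hat = decode(Z, mean, W)  # shape (200, 10): reconstructions
print(Z.shape, float(np.mean((X - X_hat) ** 2)))
```

Reconstruction is nearly exact here only because the toy data truly has two underlying factors; real media rarely compresses this cleanly.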

Why It Matters for Media Assets

This concept is not limited to images; it applies equally to audio, video, and even 3D models. The latent space organizes these complex assets based on their essential features, not just surface-level details, which is what makes powerful manipulation and generation possible. For example, you can move from a point representing a “sad song” to one representing a “happy song” and generate the variations in between.

Core Techniques for Navigating Latent Space

Once you have this map, you can start exploring it. Navigation is about moving from point to point to create new or modified media assets. Several core techniques enable this exploration.

Interpolation: The Straight Path

The simplest navigation method is interpolation: pick two points in the latent space and draw a straight line between them. For instance, you could select the latent point for a photo of a cat and another for a photo of a dog. By sampling points along the connecting line and decoding each one, you get a smooth transition that can be played as a video morphing the cat into the dog. How plausible the in-between frames look shows how the model has organized “cat-ness” and “dog-ness” in its internal representation.
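A minimal sketch of straight-line interpolation, assuming latent codes are plain NumPy vectors. The two codes below are made-up placeholders, not outputs of any real model; in practice each row of the path would be passed to the model's decoder to render one frame.

```python
import numpy as np

def interpolate(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced on the straight line from z_start to z_end."""
    z_start = np.asarray(z_start, dtype=float)
    z_end = np.asarray(z_end, dtype=float)
    t = np.linspace(0.0, 1.0, steps)[:, None]  # column of blend weights in [0, 1]
    return (1.0 - t) * z_start + t * z_end     # shape (steps, latent_dim)

z_cat = np.array([1.0, 0.0, 2.0])   # hypothetical latent code for the cat photo
z_dog = np.array([-1.0, 4.0, 0.0])  # hypothetical latent code for the dog photo
path = interpolate(z_cat, z_dog, steps=5)
print(path)
```

Linear interpolation matches the method described above. For models with a Gaussian prior, some practitioners prefer spherical interpolation (slerp), because straight-line midpoints between two random Gaussian vectors have an unusually small norm.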

Vector Arithmetic: Manipulating Concepts

A more powerful technique is vector arithmetic. This method treats features as directions within the latent space, so you can add or subtract these feature vectors to manipulate the output in a controlled way. The most famous example comes from word embeddings: “King” - “Man” + “Woman” results in a vector very close to “Queen”. The same logic applies to media. For example, you could find a “smile” vector by subtracting the latent code of a neutral face from that of a smiling face. Adding this vector to the latent code of another face will tend to make it smile.
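Here is a minimal sketch of concept-vector arithmetic on synthetic latent codes. Averaging over several examples per group, rather than subtracting a single face from another, tends to cancel out incidental differences such as pose or lighting. The data is fabricated for illustration: a hidden "smile" offset is planted in dimension 3 so we can check that the estimate recovers it.

```python
import numpy as np

def attribute_vector(with_attr, without_attr):
    """Estimate a concept direction as the difference of mean latent codes."""
    return np.mean(with_attr, axis=0) - np.mean(without_attr, axis=0)

def apply_attribute(z, direction, strength=1.0):
    """Move a latent code along a concept direction; strength scales the edit."""
    return z + strength * direction

rng = np.random.default_rng(1)
latent_dim = 8
true_smile = np.zeros(latent_dim)
true_smile[3] = 2.0  # hidden ground truth, toy data only

neutral = rng.normal(size=(50, latent_dim))               # hypothetical neutral-face codes
smiling = rng.normal(size=(50, latent_dim)) + true_smile  # hypothetical smiling-face codes

smile = attribute_vector(smiling, neutral)
new_face = rng.normal(size=latent_dim)
edited = apply_attribute(new_face, smile, strength=1.0)
```

Values of `strength` between 0 and 1 give a partial smile; negative values push the other way.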

Traversal and Sampling: Exploring New Ideas

Finally, you can simply “walk” through the latent space. Moving along a single axis or dimension while holding the others fixed, and observing how the generated media changes, is called traversal. For example, one dimension might control background color, while another controls the age of a person in a portrait. This exploration is fundamental for discovering the capabilities of a generative model. It helps you understand what the model has learned and how it has organized different concepts.
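A minimal traversal sketch, assuming a NumPy latent code: it builds a batch in which only one coordinate is swept while all others stay fixed. The starting code and the sweep range of -3 to 3 are arbitrary illustrative choices.

```python
import numpy as np

def traverse(z, dim, values):
    """Return copies of z where only coordinate `dim` is swept through `values`."""
    z = np.asarray(z, dtype=float)
    batch = np.tile(z, (len(values), 1))  # one row per step, all identical to z
    batch[:, dim] = values                # overwrite just the chosen axis
    return batch

z = np.array([0.3, -1.2, 0.8, 0.0])
sweep = traverse(z, dim=2, values=np.linspace(-3.0, 3.0, 7))
# Decoding each row and viewing the outputs side by side shows what axis 2 controls.
```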

Challenges in Efficient Navigation

While powerful, navigating latent space is not without its difficulties. Data scientists must overcome several key challenges to use these techniques effectively in real-world applications.

The Problem of Disentanglement

An ideal latent space is “disentangled.” In such a space, each dimension corresponds to one single, interpretable feature. For example, one axis would control only hair color and nothing else. However, most models produce entangled spaces. In an entangled space, changing one attribute might unintentionally alter others. For instance, trying to add a smile might also change the person’s gender or the lighting in the image. This makes precise control very difficult. Therefore, a major area of research is developing models that learn more disentangled representations.
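One way to see entanglement is to measure how each output attribute responds to a small nudge in each latent dimension. The sketch below estimates this with finite differences. It is a toy under stated assumptions: with a real model, the decoder would output images and the "attributes" would come from a separate attribute classifier applied to them, whereas here the two hypothetical decoders return `[smile, lighting]` directly.

```python
import numpy as np

def sensitivity_matrix(decoder, z, eps=1e-4):
    """Finite-difference estimate of d(attribute i) / d(latent j) at the point z."""
    z = np.asarray(z, dtype=float)
    base = decoder(z)
    S = np.zeros((base.size, z.size))
    for j in range(z.size):
        dz = np.zeros_like(z)
        dz[j] = eps
        S[:, j] = (decoder(z + dz) - base) / eps
    return S

def attributes_touched(S, tol=1e-3):
    """Count, per latent dimension, how many attributes it noticeably changes."""
    return (np.abs(S) > tol).sum(axis=0)

def disentangled_decoder(z):
    """Hypothetical decoder: dim 0 drives only smile, dim 1 only lighting."""
    return np.array([z[0], z[1]])

def entangled_decoder(z):
    """Hypothetical decoder: each latent dim leaks into both attributes."""
    return np.array([z[0] + 0.5 * z[1], 0.7 * z[0] + z[1]])

z0 = np.zeros(2)
print(attributes_touched(sensitivity_matrix(disentangled_decoder, z0)))  # [1 1]
print(attributes_touched(sensitivity_matrix(entangled_decoder, z0)))     # [2 2]
```

In the ideal case each column of the sensitivity matrix has a single significant entry; entanglement shows up as several.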

High-Dimensionality and “Holes”

Latent spaces are often vast and high-dimensional. Not every point in this space will decode into a high-quality or coherent media asset. Some regions, often called “holes,” may produce noisy or nonsensical outputs. Navigating around these holes requires careful sampling strategies. It is a significant challenge because it is hard to know where these invalid zones are without extensive testing. This adds complexity to any application that relies on random exploration of the space.
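One widely used sampling strategy for models with a Gaussian prior, such as many GANs, is the "truncation trick" popularized by BigGAN: any latent coordinate whose magnitude exceeds a threshold is redrawn, which keeps samples near the well-trained center of the prior. This trades diversity for reliability and does not guarantee avoiding every hole. The threshold of 0.7 below is an illustrative assumption that would need tuning per model.

```python
import numpy as np

def truncated_normal(rng, size, threshold=1.0):
    """Sample from N(0, I), redrawing any coordinate whose magnitude exceeds `threshold`."""
    z = rng.standard_normal(size)
    mask = np.abs(z) > threshold
    while mask.any():
        # Redraw only the out-of-range coordinates until all fall within the threshold.
        z[mask] = rng.standard_normal(mask.sum())
        mask = np.abs(z) > threshold
    return z

rng = np.random.default_rng(42)
samples = truncated_normal(rng, size=(1000, 128), threshold=0.7)
```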

Computational Cost

Decoding a point from the latent space back into a high-resolution media asset is computationally expensive. Continuously sampling points to find a desired output can quickly become a bottleneck. As a result, efficiency is a primary concern. Techniques such as cost-effective video generation with sparse tokens aim to reduce this burden by concentrating computation on the most important parts of the asset.
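A complementary, generic way to limit decoding cost (not a method named above) is to score candidate points cheaply in latent space first and decode only the most promising few. The sketch assumes a hypothetical cheap scoring function, here the distance to a known good latent point `TARGET`, and a hypothetical `CountingDecoder` standing in for the real, expensive decoder.

```python
import numpy as np

def search_with_prefilter(candidates, cheap_score, decode, top_k):
    """Rank latent candidates with a cheap score and decode only the best `top_k`."""
    scores = np.array([cheap_score(z) for z in candidates])
    best = np.argsort(scores)[::-1][:top_k]  # indices of the highest scores
    return [(int(i), decode(candidates[i])) for i in best]

class CountingDecoder:
    """Stand-in for a costly decoder that records how many times it runs."""

    def __init__(self):
        self.calls = 0

    def __call__(self, z):
        self.calls += 1
        return np.outer(z, z)  # placeholder "image"

TARGET = np.array([1.0, 1.0, 0.0])  # hypothetical known good latent point

def target_closeness(z):
    """Cheap latent-space score: higher means closer to TARGET."""
    return -float(np.linalg.norm(z - TARGET))

rng = np.random.default_rng(7)
candidates = rng.normal(size=(500, 3))
decoder = CountingDecoder()
results = search_with_prefilter(candidates, target_closeness, decoder, top_k=5)
print(decoder.calls)  # 5 decodes instead of 500
```

The savings only hold if the cheap score correlates with what you want in the decoded output; a poor proxy just decodes the wrong five points.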

Practical Applications for Data Scientists

Despite the challenges, efficient latent space navigation enables many powerful applications that are transforming industries. These tools provide new ways to interact with and generate digital content.

Generative Art and Content Creation

The most obvious application is in creative fields. Artists and designers can explore latent spaces to discover novel aesthetics and generate unique images, music, and animations. This turns the AI model into a collaborative partner, a tool for inspiration rather than just a simple image generator.

Semantic Search and Asset Retrieval

Traditional search relies on keywords and metadata. Latent space, by contrast, enables semantic search. Instead of searching for the keyword “dog,” you can find images based on abstract descriptions like “a lonely dog in the rain.” This works because the model encodes relationships between concepts, so items with similar meanings end up near each other. Semantic image search can dramatically cut costs by making massive asset libraries more accessible and reusable.
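Under the hood, semantic search usually reduces to nearest-neighbor lookup by cosine similarity. The sketch assumes every asset has already been embedded, and that the query (for a text query like “a lonely dog in the rain”, this requires a model trained to align text and images in one space) has been embedded into the same space. The embeddings below are random placeholders, with the query deliberately placed next to asset 42.

```python
import numpy as np

def cosine_top_k(query, index, k=3):
    """Return indices and cosine similarities of the k index rows most similar to `query`."""
    q = query / np.linalg.norm(query)
    X = index / np.linalg.norm(index, axis=1, keepdims=True)
    sims = X @ q  # cosine similarity for every asset
    order = np.argsort(sims)[::-1][:k]
    return order, sims[order]

rng = np.random.default_rng(5)
index = rng.normal(size=(100, 16))                  # hypothetical asset embeddings
query = index[42] + 0.01 * rng.normal(size=16)      # hypothetical query embedding
order, sims = cosine_top_k(query, index, k=3)
```

For large libraries, an approximate nearest-neighbor index would replace the brute-force matrix product, but the similarity measure stays the same.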

Data Augmentation

High-quality training data is often scarce. Latent space navigation offers a solution through data augmentation. By taking an existing data point and making small, meaningful variations in the latent space (like slightly changing the viewing angle), you can generate a wealth of new, realistic training examples. This can significantly improve the performance and robustness of other machine learning models.
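A minimal augmentation sketch, assuming you already have the latent code of a real example: it adds small isotropic Gaussian noise to produce nearby variants. This is the simplest form of the idea; to vary a specific factor such as viewing angle, you would instead step along a direction associated with that factor. The noise scale `sigma` is a hypothetical value: too small yields near-duplicates, too large can land in holes.

```python
import numpy as np

def augment_latent(z, n_variants, sigma, rng):
    """Create n_variants latent codes by adding small Gaussian noise around z."""
    z = np.asarray(z, dtype=float)
    noise = rng.normal(scale=sigma, size=(n_variants, z.size))
    return z + noise

rng = np.random.default_rng(3)
z = np.array([0.5, -0.2, 1.1, 0.0])  # hypothetical latent code of a real example
variants = augment_latent(z, n_variants=20, sigma=0.05, rng=rng)
# Each variant would be decoded into a new, slightly different training example.
```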

Conclusion: The Future of Latent Space

In conclusion, latent space is more than just a technical byproduct of generative models. It is a structured, navigable map of data that offers immense creative and analytical power. By mastering techniques like interpolation and vector arithmetic, data scientists can unlock new efficiencies in media creation, search, and data augmentation.

However, challenges like entanglement and computational cost remain. Future research will likely focus on creating more interpretable models and faster decoding methods. As these tools evolve, navigating latent space will become an even more essential skill for anyone working at the cutting edge of AI and data science.

Frequently Asked Questions (FAQs)

What’s the difference between latent space and feature space?

A feature space is typically a direct representation of hand-crafted or extracted features from the data (e.g., color histograms). In contrast, a latent space is a lower-dimensional representation learned automatically by a model, capturing deeper, more abstract relationships in the data.

Can I navigate the latent space of any machine learning model?

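Not in the sense described here. Most neural networks have internal representations, but navigating them as described above requires a decoder or generator that can turn any point back into media. That is what autoencoders and generative models such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and diffusion models provide: they are designed to map points in a latent space to new data.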

How do GANs use latent space?

In a GAN, the Generator network takes a random point from a simple latent space (usually a random noise vector) and transforms it into a complex data sample, like an image. The training process forces the Generator to map this latent space in a way that produces realistic outputs.
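To illustrate the mapping from noise to data, here is a toy, untrained generator: a two-layer network that turns 16-dimensional Gaussian noise into a flattened 28×28 "image". The layer sizes and the class name `ToyGenerator` are hypothetical. The weights are random, so the outputs are meaningless; GAN training would adjust them until the outputs look realistic. The final `tanh` squashes pixels into [-1, 1], a common convention for normalized images.

```python
import numpy as np

class ToyGenerator:
    """Untrained two-layer generator mapping a noise vector to a flattened image."""

    def __init__(self, latent_dim=16, hidden=64, out_pixels=28 * 28, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(scale=1 / np.sqrt(latent_dim), size=(latent_dim, hidden))
        self.W2 = rng.normal(scale=1 / np.sqrt(hidden), size=(hidden, out_pixels))

    def __call__(self, z):
        h = np.maximum(0.0, z @ self.W1)  # ReLU hidden layer
        return np.tanh(h @ self.W2)       # pixel values squashed into [-1, 1]

rng = np.random.default_rng(1)
G = ToyGenerator()
z = rng.standard_normal((4, 16))  # four random points from the latent prior
images = G(z).reshape(4, 28, 28)
```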

What tools are used for latent space visualization?

Commonly used tools include dimensionality reduction techniques like t-SNE and UMAP, often plotted using libraries like Matplotlib or Plotly in Python. These tools help project the high-dimensional latent space into 2D or 3D for human inspection.