Boost FPS: A Guide to Temporal Token Slicing

Published on January 23, 2026 by Admin

For streaming platform developers, the mission is clear. You need to deliver the highest quality video with the smoothest playback. However, this goal often clashes with a major obstacle: bandwidth limitations. As a result, achieving high frame rates like 60 or 120 FPS can be a significant challenge.

This article introduces a new technique called Temporal Token Slicing (TTS), which offers a path to higher frame rates without demanding more bandwidth. We will explore what it is, how it works, and why it matters for your platform.

The Challenge: High Frame Rates vs. Bandwidth Limits

Users today expect buttery-smooth video. This is especially true for fast-paced content like live sports and video game streaming. A higher frame rate directly translates to a better Quality of Experience (QoE). However, every frame is data, and more frames per second mean a much larger data stream.

Traditionally, developers face a difficult trade-off. You can either increase the bitrate to support higher frame rates, which risks buffering for users on slower connections, or you can cap the frame rate to ensure stability. Neither option is ideal. Modern codecs such as H.265 (HEVC) and AV1 are remarkably efficient, but they do not escape this trade-off: more frames still require more bits.

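For example, doubling the frame rate from 30 FPS to 60 FPS doubles the number of frames to encode. Because consecutive frames are more alike at higher frame rates, the compressed bitrate usually grows by less than 2x, but for high-motion content it can come close to doubling, putting immense pressure on your infrastructure and your users’ networks.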
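To see why frame rate and bandwidth are tied together, the short Python sketch below computes the uncompressed data rate of a 1080p stream. It assumes 8-bit YUV 4:2:0 sampling (12 bits per pixel), and the helper name `raw_bitrate_mbps` is illustrative. The raw figure scales exactly linearly with frame rate; a codec then removes most of it, which is why the compressed increase is usually smaller than the raw one.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: float = 12.0) -> float:
    """Uncompressed video data rate in megabits per second.

    The default of 12 bits per pixel assumes 8-bit YUV 4:2:0 sampling.
    """
    return width * height * bits_per_pixel * fps / 1_000_000


if __name__ == "__main__":
    for fps in (30, 60, 120):
        print(f"1080p @ {fps} FPS: {raw_bitrate_mbps(1920, 1080, fps):,.1f} Mbit/s")
```

Doubling the frame rate doubles the raw figure (about 746.5 Mbit/s at 30 FPS versus about 1,493.0 Mbit/s at 60 FPS); the compressed bitrate viewers actually receive is far lower.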

What is Temporal Token Slicing?

Temporal Token Slicing is an innovative, AI-driven approach to video compression. Rather than describing each frame as a grid of pixel blocks, TTS represents video as semantic tokens and analyzes how they evolve over time. It identifies what is new, what has changed, and what is redundant.

Think of it like this. Imagine you are sending a text document to a friend every second. A traditional method would send the entire document each time. In contrast, a smarter method would only send the words that have changed since the last version. Temporal Token Slicing applies this logic to video frames.
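The document analogy maps directly onto a diff. This minimal Python sketch uses the standard library's `difflib` to transmit only the changed words and rebuild the new version on the receiving side. The function names are illustrative, and TTS itself operates on video tokens rather than words.

```python
import difflib
from typing import List, Tuple

Edit = Tuple[int, int, List[str]]  # (start, end, replacement words) in the old text


def word_delta(old: str, new: str) -> List[Edit]:
    """Describe `new` as word-level edits to `old`, listing only what changed."""
    old_words, new_words = old.split(), new.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words, autojunk=False)
    return [
        (i1, i2, new_words[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"  # unchanged runs of words are never sent
    ]


def apply_delta(old: str, edits: List[Edit]) -> str:
    """Receiver side: rebuild the new text from the old text plus the edits.
    Whitespace is normalized to single spaces."""
    words = old.split()
    # Apply from the end so earlier indices are not shifted by later edits.
    for start, end, replacement in reversed(edits):
        words[start:end] = replacement
    return " ".join(words)


if __name__ == "__main__":
    old = "the quick brown fox jumps over the lazy dog"
    new = "the quick red fox jumps over the sleepy dog"
    edits = word_delta(old, new)
    print(edits)
    print(apply_delta(old, edits))
```

Only the two replaced words travel; everything else is reused from the copy the receiver already holds.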

How Does Temporal Token Slicing Work?

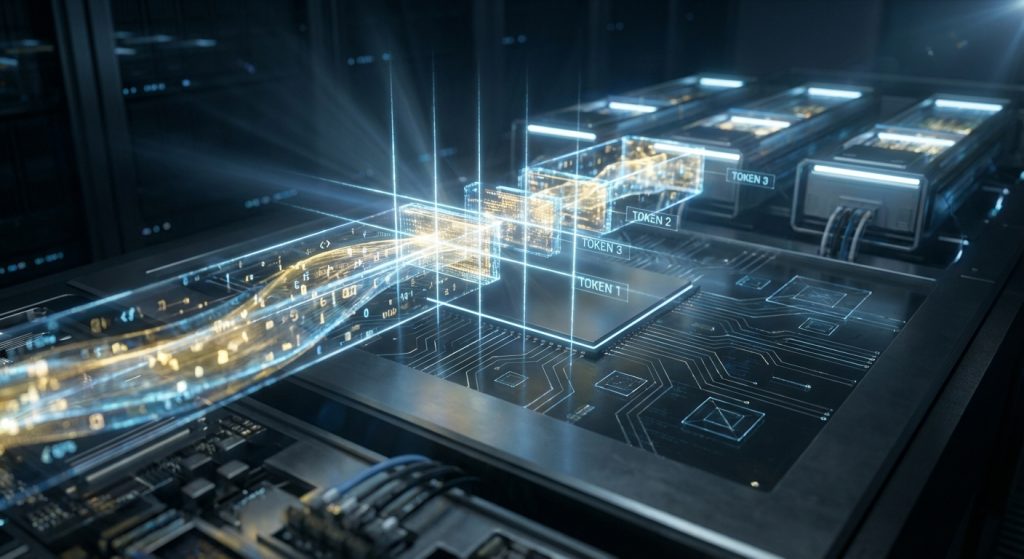

The process breaks down video into meaningful chunks, not just pixels. These chunks are called “tokens.” A token could represent a small patch of texture, an object’s edge, or even a complex motion pattern. The system then works in a few simple steps.

- Tokenization: First, the video encoder converts the incoming video into a sequence of these semantic tokens.

- Temporal Analysis: Next, an AI model examines tokens across several frames. It learns the patterns, such as a static background or a predictable movement.

- Predictive Slicing: Based on past frames, the model predicts the next frame. Then, it “slices” away all the tokens from the actual frame that match its prediction.

- Delta Transmission: As a result, only the difference, meaning the new or unpredictable tokens, is packaged and sent over the network. This “delta” is usually much smaller than the full frame.

- Reconstruction: Finally, the client’s device uses the same AI model to take the delta tokens and reconstruct the full, high-fidelity frame.
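The five steps above can be sketched end to end in Python. This is a toy illustration under loud assumptions: tokens are fixed 4x4 pixel patches rather than semantic tokens, the “AI model” is replaced by a trivial predictor that repeats the previous frame, and every name and threshold is hypothetical.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Frame = List[List[int]]                   # grayscale pixels, 0-255
Token = Tuple[int, ...]                   # one flattened pixel patch
TokenMap = Dict[Tuple[int, int], Token]   # (patch_row, patch_col) -> token

PATCH = 4          # hypothetical patch size; real TTS would use semantic tokens
THRESHOLD = 8.0    # hypothetical tolerance for "matches the prediction"
HISTORY = 4        # how many past frames the predictor may look at


def tokenize(frame: Frame, patch: int = PATCH) -> TokenMap:
    """Tokenization: split a frame into non-overlapping patch x patch tokens."""
    h, w = len(frame), len(frame[0])
    return {
        (r // patch, c // patch): tuple(
            frame[y][x]
            for y in range(r, min(r + patch, h))
            for x in range(c, min(c + patch, w))
        )
        for r in range(0, h, patch)
        for c in range(0, w, patch)
    }


def predict(history: List[TokenMap]) -> TokenMap:
    """Temporal analysis stand-in: assume a static scene and repeat the last frame.
    A learned model would use the whole history to extrapolate motion."""
    return dict(history[-1]) if history else {}


def mean_abs_error(a: Token, b: Token) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def slice_tokens(actual: TokenMap, predicted: TokenMap, threshold: float = THRESHOLD) -> TokenMap:
    """Predictive slicing: drop tokens the prediction already got right."""
    return {
        pos: tok
        for pos, tok in actual.items()
        if pos not in predicted or mean_abs_error(tok, predicted[pos]) > threshold
    }


def reconstruct(predicted: TokenMap, delta: TokenMap) -> TokenMap:
    """Reconstruction: start from the prediction and overwrite it with the delta."""
    frame = dict(predicted)
    frame.update(delta)
    return frame


def encode_stream(frames: Iterable[Frame]) -> Iterator[TokenMap]:
    history: List[TokenMap] = []
    for frame in frames:
        predicted = predict(history)
        delta = slice_tokens(tokenize(frame), predicted)
        # Track what the client will reconstruct, not the original frame,
        # so encoder and decoder predictions never drift apart.
        history = (history + [reconstruct(predicted, delta)])[-HISTORY:]
        yield delta  # delta transmission: only these tokens go on the wire


def decode_stream(deltas: Iterable[TokenMap]) -> Iterator[TokenMap]:
    history: List[TokenMap] = []
    for delta in deltas:
        frame = reconstruct(predict(history), delta)
        history = (history + [frame])[-HISTORY:]
        yield frame


if __name__ == "__main__":
    still = [[10] * 8 for _ in range(8)]
    moved = [row[:] for row in still]
    for y in range(4):
        for x in range(4):
            moved[y][x] = 200  # only the top-left 4x4 patch changes
    for i, delta in enumerate(encode_stream([still, moved])):
        print(f"frame {i}: sent {len(delta)} of 4 tokens")
```

The first frame has no history, so every token is sent; the second frame sends only the one patch that changed. Predicting from the reconstructed frames (a closed loop) keeps both sides in sync even though tokens within the threshold are never transmitted exactly.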

The entire process is designed to run in real time. Because only the delta is transmitted for each frame, it can substantially reduce the data required for each second of video.

The Core Benefits for Streaming Platforms

Adopting Temporal Token Slicing offers several powerful advantages for developers and businesses. The benefits go beyond just smoother video and touch on costs and scalability.

Drastically Reduced Bandwidth

The most immediate benefit is a significant reduction in bandwidth consumption. Because TTS sends only essential information, the data payload for each frame plummets. This frees up network capacity, making 60 FPS or even 120 FPS streams viable for a much wider audience. In addition, it helps users with data caps.
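The bandwidth argument comes down to simple arithmetic: bitrate equals frames per second times the bits sent per frame. The Python sketch below uses hypothetical numbers (a 1920x1088 frame cut into 16x16 tokens, 64 bits per token) purely to show the relationship; it is not a measurement of any real TTS system.

```python
def stream_kbps(fps: float, tokens_per_frame: int, bits_per_token: float,
                fraction_sent: float) -> float:
    """Average bitrate (kbit/s) when only `fraction_sent` of each frame's tokens
    survive slicing. All inputs are illustrative assumptions."""
    return fps * tokens_per_frame * bits_per_token * fraction_sent / 1000


TOKENS = (1920 // 16) * (1088 // 16)  # 120 * 68 = 8,160 tokens per frame
BITS_PER_TOKEN = 64                   # hypothetical

full_30 = stream_kbps(30, TOKENS, BITS_PER_TOKEN, 1.0)
sliced_120 = stream_kbps(120, TOKENS, BITS_PER_TOKEN, 0.25)
print(f"{full_30:.1f} kbit/s vs {sliced_120:.1f} kbit/s")
```

Under these assumptions, if slicing leaves a quarter of the tokens, a 120 FPS stream costs the same bandwidth as sending every token at 30 FPS (15,667.2 kbit/s in both cases).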

Improved User Experience (QoE)

Lower data requirements directly lead to a better user experience. Viewers will encounter less buffering and fewer frustrating quality drops. Moreover, the motion in sports, gaming, and action scenes appears far more fluid and lifelike, increasing engagement and satisfaction.

Lower CDN and Egress Costs

For any streaming service, content delivery network (CDN) and data egress costs are a major operational expense. By reducing the amount of data you transmit, TTS can lead to substantial cost savings. As your platform scales, these savings become even more significant, directly improving your bottom line.
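A back-of-the-envelope Python sketch makes the scaling explicit. The $0.02/GB price and the drop from 6 Mbit/s to 4 Mbit/s are hypothetical inputs chosen for illustration, not a claim about what TTS achieves or what any CDN charges.

```python
def egress_cost_usd(avg_mbps: float, viewer_hours: float, usd_per_gb: float) -> float:
    """Delivery cost for `viewer_hours` of streaming at `avg_mbps`.
    Uses decimal units: 1 GB = 1,000 MB = 8,000 Mbit."""
    gigabytes = avg_mbps * 3600 * viewer_hours / 8 / 1000
    return gigabytes * usd_per_gb


PRICE = 0.02  # hypothetical $/GB; real CDN pricing varies by provider and tier
before = egress_cost_usd(6.0, 1_000_000, PRICE)
after = egress_cost_usd(4.0, 1_000_000, PRICE)
print(f"${before:,.0f} -> ${after:,.0f} (saves ${before - after:,.0f})")
```

Because cost is linear in viewer-hours, the absolute savings grow in step with audience size: twice the viewing time doubles both the bill and the amount saved.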

Adaptive Bitrate on a New Level

Temporal Token Slicing enhances adaptive bitrate (ABR) streaming. The “slicing” can be adjusted dynamically based on real-time network conditions. For instance, if a user’s connection weakens, the AI can choose to slice more aggressively, preserving frame rate at a slight quality cost. This provides more granular control than traditional ABR ladders.
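One way to picture bandwidth-driven slicing is a controller that picks the gentlest slicing threshold whose delta still fits the per-frame bit budget. This Python sketch is an assumption-laden illustration: the threshold ladder, the per-token error inputs, and the fixed bits-per-token cost are all hypothetical.

```python
from typing import Sequence

# Hypothetical ladder of slicing thresholds, from gentle (higher quality) to aggressive.
THRESHOLDS = (2.0, 4.0, 8.0, 16.0, 32.0)


def choose_threshold(token_errors: Sequence[float], bits_per_token: float,
                     bandwidth_bps: float, fps: float,
                     thresholds: Sequence[float] = THRESHOLDS) -> float:
    """Pick the gentlest threshold whose delta fits the per-frame bit budget.

    `token_errors` holds each token's prediction error for the upcoming frame;
    tokens with error above the threshold are sent, the rest are sliced away.
    """
    budget_bits = bandwidth_bps / fps  # fps stays fixed; quality absorbs the squeeze
    for t in thresholds:
        sent = sum(1 for e in token_errors if e > t)
        if sent * bits_per_token <= budget_bits:
            return t
    return thresholds[-1]  # link too weak even for the most aggressive setting


errors = [1, 3, 5, 9, 17, 40]  # toy per-token prediction errors
for bw in (600_000, 120_000, 30_000):
    print(bw, choose_threshold(errors, bits_per_token=1000, bandwidth_bps=bw, fps=60))
```

As the link weakens from 600 kbit/s to 120 kbit/s, the controller moves from threshold 2.0 to 16.0 while holding 60 FPS. At 30 kbit/s even the most aggressive setting overflows the budget; the sketch simply returns that setting, and what a production system does beyond that point is outside its scope.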

Implementing Temporal Token Slicing: A Practical Overview

Integrating TTS into a streaming platform requires a new set of tools. The core components are the AI models for encoding and decoding. These are typically provided through specialized SDKs that can be integrated into your existing video pipeline.

It is important to acknowledge the computational overhead. This technique trades bandwidth for processing power. The AI models need CPU or GPU resources on both the server and client side. However, modern models are highly optimized. They are designed to run efficiently on a wide range of devices, from high-end PCs to mobile phones with dedicated AI hardware.

The key to a successful implementation is efficiency. Researchers are constantly working on ways to make these models smaller and faster without sacrificing quality. Related work, such as “Neural Video Synthesis: Maximizing Token Efficiency,” is worth exploring for developers who want to stay on the cutting edge of this technology.

Ultimately, TTS represents a paradigm shift. It moves away from brute-force data transmission and towards an intelligent, context-aware streaming model. For platforms willing to embrace this future, the rewards are immense.

Frequently Asked Questions

Is Temporal Token Slicing a replacement for codecs like AV1?

No, it is not a direct replacement. Instead, you should view it as a complementary technology. TTS can work on top of or alongside traditional codecs. For example, the “delta tokens” themselves can be compressed using a standard codec, creating an even more efficient stream.
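As a rough illustration of layering a general-purpose compressor on top of the delta tokens, this Python sketch serializes a delta (assumed here to be a dict mapping (row, col) patch positions to tuples of 8-bit pixel values) and compresses it with `zlib`. zlib is a stand-in chosen because it ships with Python; it is not a video codec, and the byte layout is hypothetical.

```python
import struct
import zlib
from typing import Dict, Tuple

Delta = Dict[Tuple[int, int], Tuple[int, ...]]


def pack_delta(delta: Delta) -> bytes:
    """Serialize each token as a 4-byte position header plus its pixel bytes,
    then compress the whole payload."""
    out = bytearray()
    for (row, col), token in sorted(delta.items()):
        out += struct.pack(">HH", row, col)  # big-endian uint16 row, col
        out += bytes(token)                  # assumes 8-bit pixels (0-255)
    return zlib.compress(bytes(out), 9)


def unpack_delta(blob: bytes, token_len: int) -> Delta:
    """Inverse of pack_delta; every token must have `token_len` pixels."""
    raw = zlib.decompress(blob)
    record = 4 + token_len
    delta: Delta = {}
    for i in range(0, len(raw), record):
        row, col = struct.unpack_from(">HH", raw, i)
        delta[(row, col)] = tuple(raw[i + 4:i + record])
    return delta


flat = {(r, c): (200,) * 16 for r in range(10) for c in range(10)}
blob = pack_delta(flat)
print(f"{len(flat) * 20} bytes raw -> {len(blob)} bytes compressed")
```

Highly regular deltas, such as a uniformly colored region, shrink well under this second stage; noisy ones gain much less.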

What is the impact of this technology on latency?

The added latency is usually small, and TTS can even reduce end-to-end latency. While the AI models add some processing time, the large reduction in data size means less time is spent transmitting packets over the network. When that transmission saving outweighs the extra processing, end-to-end latency can be lower than with traditional methods, which is critical for live streaming.
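The latency trade-off can be checked with a per-frame budget: encode time plus transmission time plus decode time. The frame sizes, link speed, and processing times in this Python sketch are hypothetical, chosen only to show when the trade pays off and when it does not.

```python
def frame_latency_ms(frame_bits: float, link_bps: float,
                     encode_ms: float, decode_ms: float) -> float:
    """Encode + transmit + decode time for one frame.
    Network propagation delay is left out because it is the same either way."""
    transmit_ms = frame_bits / link_bps * 1000
    return encode_ms + transmit_ms + decode_ms


LINK = 10_000_000  # hypothetical 10 Mbit/s connection
baseline = frame_latency_ms(400_000, LINK, encode_ms=5, decode_ms=5)
tts = frame_latency_ms(100_000, LINK, encode_ms=12, decode_ms=12)
heavy = frame_latency_ms(100_000, LINK, encode_ms=30, decode_ms=30)
print(f"baseline {baseline:.1f} ms, light model {tts:.1f} ms, heavy model {heavy:.1f} ms")
```

With these inputs the smaller frame saves 30 ms of transmission, so the light model wins (34 ms versus 50 ms), while the heavy model ends up slower (70 ms). Measuring on your actual target devices settles which case applies.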

How much processing power is needed on the client device?

This can vary. High-fidelity reconstruction requires more power, making modern devices with dedicated AI chips ideal. However, scalable models exist that can run on older or less powerful hardware by performing a less complex reconstruction. The system can adapt based on the client’s detected capabilities.
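One way to express “adapt based on the client’s detected capabilities” is a simple tier lookup. This Python sketch is hypothetical throughout: the `DeviceCaps` fields, tier names, and TFLOPS cut-offs are invented for illustration, and a real SDK would expose its own capability detection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceCaps:
    has_npu: bool        # dedicated AI accelerator detected (hypothetical field)
    gpu_tflops: float    # rough GPU throughput estimate (hypothetical field)


# Hypothetical reconstruction tiers, most demanding first: (name, minimum GPU TFLOPS).
TIERS = (("full", 4.0), ("lite", 1.0), ("minimal", 0.0))


def pick_tier(caps: DeviceCaps) -> str:
    """Choose the richest reconstruction model the device can likely sustain."""
    if caps.has_npu:
        return TIERS[0][0]  # dedicated AI hardware gets the full model
    for name, min_tflops in TIERS:
        if caps.gpu_tflops >= min_tflops:
            return name
    return TIERS[-1][0]  # fall back to the simplest reconstruction
```

Ordering tiers from most to least demanding means the first match is the best model the device qualifies for, and adding a new tier only requires inserting one tuple.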

Is this technology difficult to implement?

While the underlying AI is complex, implementation is becoming easier. Most providers of this technology offer robust SDKs and APIs that handle the heavy lifting. A developer’s main task is to integrate this SDK into their existing player and encoder infrastructure, which is a familiar process for most streaming teams.
