Edge Computing Efficiency: An Architect’s Guide

Published on January 13, 2026 by Admin

What is Edge Computing Efficiency?

Edge computing efficiency is about getting the most out of your distributed resources. It means balancing performance against constraints such as power, cost, and physical space. An efficient edge system processes data quickly and reliably without wasting those limited resources.

Defining the Core Concept

At its heart, efficiency is a measure of output versus input. In edge computing, the output is processed data or a completed task. The inputs, on the other hand, are resources like CPU cycles, memory, bandwidth, and electricity. An efficient system maximizes the output for every unit of input. This is critical in environments where resources are limited.
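
As a rough illustration, efficiency can be expressed as useful output divided by resource input. The sketch below uses "tasks per joule" as a hypothetical metric; the `RunStats` fields and all numbers are assumptions for illustration, not measurements from any real device.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    """Hypothetical measurements from one workload run on an edge node."""
    tasks_completed: int    # output: e.g. inferences or processed messages
    avg_power_watts: float  # input: average electrical power drawn
    duration_s: float       # wall-clock duration of the run


def tasks_per_joule(stats: RunStats) -> float:
    """Output per unit of energy input: tasks / (watts * seconds)."""
    energy_joules = stats.avg_power_watts * stats.duration_s
    if energy_joules <= 0:
        raise ValueError("energy must be positive")
    return stats.tasks_completed / energy_joules


# Two hypothetical configurations doing the same job in the same time.
baseline = RunStats(tasks_completed=12_000, avg_power_watts=10.0, duration_s=600)
tuned = RunStats(tasks_completed=12_000, avg_power_watts=6.0, duration_s=600)

print(f"baseline: {tasks_per_joule(baseline):.2f} tasks/J")
print(f"tuned:    {tasks_per_joule(tuned):.2f} tasks/J")
```

The same ratio works for other inputs (tasks per CPU-second, per megabyte sent); the point is to pick one that matches your scarcest resource.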

Why It’s Critical for IoT Architects

For an IoT architect, efficiency is not just a technical goal. It is a business imperative. Inefficient systems lead to higher operational costs. They can also cause poor user experiences and limit scalability. For instance, a battery-powered sensor that drains too quickly is a failed design. Similarly, an edge gateway that constantly requires expensive cloud data transfers defeats its purpose. Therefore, architects must design for efficiency from day one.

Key Pillars of Edge Efficiency

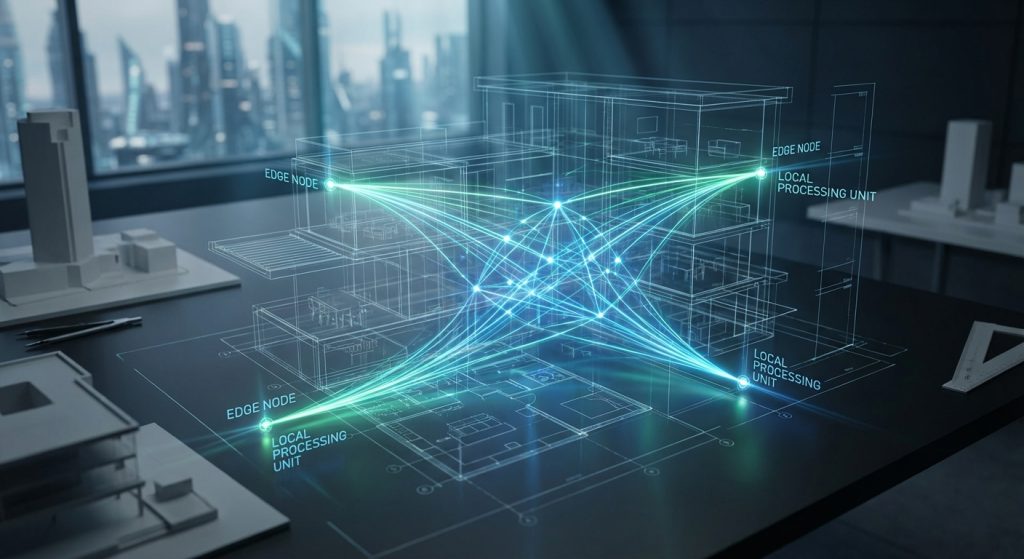

Achieving efficiency requires a holistic approach. You must consider three main pillars: hardware, software, and networking. Each area offers unique opportunities for optimization. A weakness in one pillar can undermine the entire system.

Hardware and Resource Optimization

The foundation of your edge deployment is its hardware. Choosing the right components is essential. You must consider the specific workload of your application. For example, AI and machine learning tasks may require specialized processors like GPUs or TPUs.

In addition, power consumption is a major concern. Many edge devices operate on batteries or limited power sources. Therefore, selecting low-power components is vital. This extends beyond the CPU to include memory, storage, and connectivity modules. Proper hardware selection directly impacts both performance and operational longevity.
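
The idea that power extends beyond the CPU can be sketched as a simple selection rule: sum the draw of every component in a candidate configuration, discard candidates that cannot meet the workload, and pick the lowest-power survivor. The candidate names, throughput figures (in frames per second), and wattages below are hypothetical placeholders, not vendor data.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """A hypothetical edge hardware configuration."""
    name: str
    throughput_fps: float  # measured capacity for the target workload
    component_watts: dict[str, float] = field(default_factory=dict)

    @property
    def total_watts(self) -> float:
        # The power budget covers every component, not just the CPU.
        return sum(self.component_watts.values())


def pick_hardware(candidates: list[Candidate], required_fps: float) -> Candidate:
    """Return the lowest-power candidate that still meets the workload."""
    viable = [c for c in candidates if c.throughput_fps >= required_fps]
    if not viable:
        raise ValueError("no candidate meets the required throughput")
    return min(viable, key=lambda c: c.total_watts)


candidates = [
    Candidate("cpu-only", 8.0,
              {"cpu": 6.0, "memory": 1.0, "storage": 0.5, "radio": 1.5}),
    Candidate("npu-module", 30.0,
              {"cpu": 3.0, "npu": 2.5, "memory": 1.0, "storage": 0.5, "radio": 1.5}),
    Candidate("discrete-gpu", 120.0,
              {"cpu": 6.0, "gpu": 25.0, "memory": 2.0, "storage": 1.0, "radio": 1.5}),
]

choice = pick_hardware(candidates, required_fps=20.0)
print(choice.name, choice.total_watts)
```

Here the most powerful option is rejected not because it fails the workload but because a smaller accelerator meets it at a fraction of the power.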

Software and Application Design

Efficient software is just as important as optimized hardware. Your code should be lightweight and purpose-built. Avoid unnecessary libraries or processes that consume valuable resources. This is where lean software development principles become very useful.

Furthermore, consider the application’s architecture. Can tasks be broken down into smaller microservices? This approach can lead to better resource utilization. It also allows for more granular control and updates. Using efficient programming languages and algorithms can also make a significant difference in performance and power draw.

Network and Data Management

Edge computing’s primary benefit is reducing network latency and bandwidth usage. However, this is only true if you manage data intelligently. You must decide what data to process at the edge. You also need to determine what data to send to the cloud.

This data filtering and aggregation is a core task of an efficient edge system. It prevents overwhelming your network or incurring high data transfer costs. In addition, you should use efficient communication protocols. Protocols like MQTT are designed for constrained IoT environments and are often a better choice than HTTP for telemetry data.
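
A minimal sketch of edge-side data reduction using only the Python standard library: a deadband filter drops readings that have not changed meaningfully, and a window summary replaces many raw samples with a single aggregate message. The 0.3 threshold, the sensor ID, and the JSON message shape are assumptions; in a real deployment the payload would be handed to whatever client publishes to your broker (for example over MQTT).

```python
import json
import statistics


def deadband_filter(readings: list[float], threshold: float) -> list[float]:
    """Keep a reading only if it differs from the last kept one by >= threshold."""
    kept: list[float] = []
    for value in readings:
        if not kept or abs(value - kept[-1]) >= threshold:
            kept.append(value)
    return kept


def summarize_window(sensor_id: str, readings: list[float]) -> str:
    """Aggregate a window of raw readings into one compact JSON message."""
    summary = {
        "sensor": sensor_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }
    # Compact separators keep the payload small on constrained links.
    return json.dumps(summary, separators=(",", ":"))


raw = [21.0, 21.1, 21.05, 21.4, 21.42, 22.0, 22.01, 21.94]  # hypothetical temperatures
changes = deadband_filter(raw, threshold=0.3)
payload = summarize_window("temp-01", raw)
print(changes)  # only the significant changes
print(payload)  # one message instead of eight
```

Whether to send the filtered changes, the summary, or both depends on what the cloud side actually needs; the two techniques are independent.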

Strategies for Maximizing Edge Efficiency

Now that we understand the pillars, let’s explore actionable strategies. These techniques can help you build and maintain a highly efficient edge infrastructure. The goal is continuous improvement.

Right-Sizing Your Edge Infrastructure

One of the biggest sources of waste is oversized infrastructure. Many architects provision edge nodes with more capacity than needed. This leads to unnecessary hardware costs and higher energy consumption. As a result, you should perform a thorough workload analysis.

Understand the resource requirements of your applications. Then, choose hardware that meets those needs with a reasonable buffer. Don’t plan for the absolute worst-case scenario if it’s highly unlikely. Instead, design a system that can scale if needed. This approach, often called right-sizing, is fundamental to cost control.
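
One way to turn "a reasonable buffer" into a number is to size for a high percentile of observed load plus headroom, rather than for the absolute peak. The sketch below assumes you already have CPU usage samples (in cores) from a workload analysis; the 95th percentile, 20% headroom, and tier names are illustrative choices, not recommendations.

```python
import statistics


def required_capacity(samples: list[float], percentile: int = 95,
                      headroom: float = 0.2) -> float:
    """Size for a high percentile of observed load plus a buffer, not the peak."""
    # quantiles(n=100) returns 99 cut points: the 1st through 99th percentiles.
    cut_points = statistics.quantiles(samples, n=100, method="inclusive")
    return cut_points[percentile - 1] * (1 + headroom)


def smallest_fit(tiers: dict[str, float], needed: float) -> str:
    """Return the smallest hardware tier whose capacity covers the need."""
    fitting = {name: cap for name, cap in tiers.items() if cap >= needed}
    if not fitting:
        raise ValueError("workload exceeds every tier; plan to scale out instead")
    return min(fitting, key=fitting.get)


# Hypothetical CPU usage samples (cores in use), including one rare spike.
samples = [1.2, 1.4, 1.1, 1.3, 1.5, 1.6, 1.2, 1.4, 1.3, 1.7, 1.5,
           1.8, 1.6, 1.4, 1.2, 1.9, 1.5, 1.3, 1.6, 2.5, 6.0]
tiers = {"small": 2.0, "medium": 4.0, "large": 8.0}  # hypothetical core counts

needed = required_capacity(samples)
peak_based = max(samples) * 1.2
print(f"p95 + 20% headroom: {needed:.2f} cores -> {smallest_fit(tiers, needed)}")
print(f"peak + 20% headroom: {peak_based:.2f} cores -> {smallest_fit(tiers, peak_based)}")
```

With these numbers, a single spike would push peak-based sizing up a full tier; the percentile approach accepts that rare bursts may need to be absorbed by scaling or queueing instead.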

Leveraging Containerization and Orchestration

Containers, such as those built and run with Docker, are a strong fit for edge computing. They allow you to package your application and its dependencies into a lightweight, portable unit. This ensures consistency across different edge devices. It also simplifies deployments and updates.

Moreover, container orchestration tools like Kubernetes (or lightweight distributions such as K3s) can automate the management of your edge applications. They can handle scaling, load balancing, and failover automatically. This improves both the efficiency and the resilience of your system. For complex deployments, mastering Kubernetes resource tuning is essential for peak performance.
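
Resource tuning in Kubernetes and K3s largely comes down to setting CPU and memory requests and limits on each container. Because `kubectl apply -f` accepts JSON as well as YAML manifests, the sketch below generates a minimal Deployment from Python. The app name, image, and resource values are hypothetical and should come from your own measurements.

```python
import json


def edge_deployment(name: str, image: str, cpu_request: str, cpu_limit: str,
                    mem_request: str, mem_limit: str, replicas: int = 1) -> dict:
    """Build a minimal apps/v1 Deployment with explicit resource requests/limits."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {
                            # Requests: what the scheduler reserves on the node.
                            "requests": {"cpu": cpu_request, "memory": mem_request},
                            # Limits: CPU above this is throttled; a container that
                            # exceeds its memory limit can be OOM-killed.
                            "limits": {"cpu": cpu_limit, "memory": mem_limit},
                        },
                    }],
                },
            },
        },
    }


manifest = edge_deployment(
    name="telemetry-filter",                           # hypothetical app name
    image="registry.example.com/telemetry-filter:1.0",  # hypothetical image
    cpu_request="100m", cpu_limit="250m",
    mem_request="64Mi", mem_limit="128Mi",
)
# Save this output to a file and apply it with: kubectl apply -f <file>
print(json.dumps(manifest, indent=2))
```

Setting requests close to measured usage lets the scheduler pack more workloads onto each small edge node, while limits keep one misbehaving container from starving its neighbors.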

Implementing Power-Aware Computing

For battery-powered or off-grid devices, power is the most critical resource. Power-aware computing involves designing systems that can dynamically adjust their performance to save energy. This can be done at both the hardware and software levels.

For example, a device could enter a low-power sleep state when not actively processing data. The CPU frequency can also be scaled down during periods of low activity. Your software can contribute by batching tasks and network communications. This allows the device’s radio and processor to remain in a sleep state for longer periods, drastically extending battery life.
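
A back-of-the-envelope way to see why batching matters: average current is the time-weighted mix of short active bursts and long sleep periods, and idealized battery life is capacity divided by that average. All currents, durations, and the 2000 mAh capacity below are hypothetical placeholders. The sketch also assumes that radio wake-up and connection overhead dominate each transmission, which is why sending ten readings at once only modestly lengthens the transmit burst.

```python
def average_current_ma(period_s: float, bursts: list[tuple[float, float]],
                       sleep_ma: float) -> float:
    """Time-weighted average current over one period: active bursts plus sleep."""
    active_s = sum(duration for duration, _ in bursts)
    if active_s > period_s:
        raise ValueError("active time exceeds the period")
    charge = sum(duration * current for duration, current in bursts)  # mA*s
    charge += (period_s - active_s) * sleep_ma
    return charge / period_s


def battery_life_days(capacity_mah: float, avg_ma: float) -> float:
    """Idealized battery life, ignoring self-discharge and temperature effects."""
    return capacity_mah / avg_ma / 24


SLEEP_MA = 0.01           # hypothetical deep-sleep current
READ = (0.05, 5.0)        # hypothetical sensor read: 50 ms at 5 mA
SEND_ONE = (1.0, 40.0)    # hypothetical radio wake + send of one reading
SEND_BATCH = (1.2, 40.0)  # hypothetical radio wake + send of ten readings

# Unbatched: read and transmit every 60 s.
unbatched = average_current_ma(60, [READ, SEND_ONE], SLEEP_MA)
# Batched: read every 60 s, transmit once every 10 minutes.
batched = average_current_ma(600, [READ] * 10 + [SEND_BATCH], SLEEP_MA)

for label, avg in [("unbatched", unbatched), ("batched", batched)]:
    print(f"{label}: {avg:.3f} mA avg, ~{battery_life_days(2000, avg):.0f} days on 2000 mAh")
```

The trade-off is latency: batched data reaches the cloud up to ten minutes later, which is acceptable for slow-moving telemetry but not for alarms.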

The Financial Impact of Edge Efficiency

Efficiency isn’t just about technical excellence; it’s about financial viability. A well-designed, efficient edge architecture can deliver significant cost savings. It can also enable new business models that would be impossible with a traditional cloud-only approach.

Reducing Total Cost of Ownership (TCO)

An efficient edge system lowers TCO in several ways. Firstly, right-sizing hardware reduces initial capital expenditure. Secondly, lower power consumption leads to smaller electricity bills over the device’s lifetime. This is especially significant in large-scale deployments.

Additionally, minimizing data transfer to the cloud cuts down on bandwidth and cloud ingress/egress fees. These fees can be a major, often unpredictable, expense. By processing more data locally, you gain control over these costs. A deep dive into these financial benefits can be found in our comprehensive guide to edge computing cost gains.
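
The three TCO levers named above (hardware, energy, and data transfer) can be combined in a simple per-device model. Every price and quantity below is a hypothetical placeholder, not a quote from any vendor or cloud provider, and the model leaves out maintenance, connectivity contracts, and financing.

```python
from dataclasses import dataclass


@dataclass
class EdgeCostModel:
    """Hypothetical per-device cost inputs; replace with your own figures."""
    hardware_cost: float      # one-time capital expenditure per device
    avg_power_watts: float    # average electrical draw
    price_per_kwh: float      # electricity tariff
    gb_sent_per_month: float  # data shipped to the cloud
    price_per_gb: float       # bandwidth plus cloud transfer fees
    years: int                # planned service life


def tco(m: EdgeCostModel, devices: int) -> float:
    """Fleet TCO = devices * (hardware + lifetime energy + lifetime transfer)."""
    hours = m.years * 365 * 24  # ignores leap days
    energy_cost = m.avg_power_watts / 1000 * hours * m.price_per_kwh
    transfer_cost = m.gb_sent_per_month * 12 * m.years * m.price_per_gb
    return devices * (m.hardware_cost + energy_cost + transfer_cost)


# Oversized node that uploads raw data vs. right-sized node that filters locally.
oversized = EdgeCostModel(hardware_cost=600, avg_power_watts=25, price_per_kwh=0.15,
                          gb_sent_per_month=50, price_per_gb=0.09, years=5)
right_sized = EdgeCostModel(hardware_cost=350, avg_power_watts=10, price_per_kwh=0.15,
                            gb_sent_per_month=2, price_per_gb=0.09, years=5)

for label, model in [("oversized", oversized), ("right-sized", right_sized)]:
    print(f"{label}: {tco(model, devices=1000):,.0f} over 5 years for 1,000 devices")
```

Even with made-up inputs, the structure shows why small per-device savings matter: every term is multiplied by the fleet size and the service life.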

Unlocking New Revenue Streams

Beyond cost savings, edge efficiency enables innovation. Low-latency processing allows for real-time applications like industrial automation, autonomous vehicles, and augmented reality. These applications create new value and open up new markets.

For example, a factory can use an efficient edge system to detect product defects in real time on the assembly line. This reduces waste and improves quality, directly boosting the bottom line. Without the speed of local processing, the round trip to the cloud would make this much harder to achieve.

Frequently Asked Questions

What is the difference between edge and fog computing?

Edge computing and fog computing are closely related. However, there is a subtle difference. Edge computing typically refers to computation happening directly on or very near the device generating the data. Fog computing, on the other hand, introduces an intermediate layer between the edge and the cloud. This “fog” layer aggregates data from multiple edge devices before sending it to the cloud. You can think of the edge as the extreme boundary, while the fog is a layer just inside that boundary.
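
To make the fog layer's role concrete, here is a minimal sketch of a fog node combining per-device summaries into one upstream record. The `Summary` format is a hypothetical assumption. The key detail is weighting each device's mean by its sample count, since a plain average of means would over-represent devices that reported fewer samples.

```python
from dataclasses import dataclass


@dataclass
class Summary:
    """Window summary produced by one edge device (hypothetical format)."""
    count: int
    mean: float
    minimum: float
    maximum: float


def merge(summaries: list[Summary]) -> Summary:
    """Combine edge summaries at the fog layer without access to raw samples."""
    total = sum(s.count for s in summaries)
    if total == 0:
        raise ValueError("no samples to merge")
    # Count-weighted mean; averaging the means directly would be biased.
    mean = sum(s.mean * s.count for s in summaries) / total
    return Summary(
        count=total,
        mean=mean,
        minimum=min(s.minimum for s in summaries),
        maximum=max(s.maximum for s in summaries),
    )


edge_a = Summary(count=30, mean=20.0, minimum=18.5, maximum=21.0)
edge_b = Summary(count=10, mean=24.0, minimum=23.0, maximum=25.5)
fog = merge([edge_a, edge_b])
print(fog)
```

Because count, mean, minimum, and maximum all merge exactly, the cloud receives one accurate record per fog node instead of one per device.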

How does edge computing improve security?

Edge computing can enhance security in several ways. Firstly, by processing sensitive data locally, it reduces the amount of data that needs to be transmitted over the network. This minimizes the risk of interception. Secondly, it avoids concentrating all data in a single, large cloud target; an attacker would need to compromise individual, distributed edge nodes to reach the same data. Because every node is also a potential entry point, each device still needs to be hardened and kept up to date. Finally, if a single edge device is compromised, the damage is often contained to that local environment, protecting the rest of the network.

How do I start optimizing my edge deployment?

The best place to start is with monitoring. You cannot optimize what you cannot measure. First, implement tools to track key metrics like CPU usage, memory consumption, power draw, and network latency on your edge devices. Then, analyze this data to identify bottlenecks and areas of waste. Start with the changes that promise the largest gains for the least effort, often called “picking the low-hanging fruit.” Even small, incremental improvements can lead to substantial gains when scaled across thousands of devices.
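
After metrics are collected, a simple first pass is to compare each device against the rest of the fleet. The sketch below assumes per-device power samples have already been gathered by your monitoring stack; the device IDs, readings, and the 1.5x-median threshold are hypothetical. A median baseline is used so that a few outliers do not drag the comparison point upward.

```python
import statistics


def flag_outliers(metric_by_device: dict[str, list[float]],
                  factor: float = 1.5) -> list[str]:
    """Flag devices whose average metric exceeds `factor` times the fleet median."""
    averages = {dev: statistics.fmean(vals) for dev, vals in metric_by_device.items()}
    fleet_median = statistics.median(averages.values())
    flagged = [dev for dev, avg in averages.items() if avg > factor * fleet_median]
    # Worst offenders first, so the biggest potential wins are reviewed first.
    return sorted(flagged, key=averages.get, reverse=True)


# Hypothetical power draw samples (watts) per gateway.
power_watts = {
    "gw-001": [6.1, 6.3, 5.9],
    "gw-002": [6.0, 6.2, 6.1],
    "gw-003": [11.8, 12.4, 12.1],
    "gw-004": [5.8, 6.0, 6.2],
    "gw-005": [9.9, 10.2, 10.0],
}
print(flag_outliers(power_watts))
```

The same function works for any metric where higher is worse, such as CPU usage or latency; flagged devices are candidates for investigation, not proof of a problem.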